New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

2018 Tencent World AI Weiqi Competition #1554

Comments

|

gcp should hide the best weight in a safe place, so that it won't be copied by other program. |

|

at least, train a 20*256 or more that beat elf, otherwise is too weak to take the Competition |

|

Is it possible to prepare different weights for different opponents? For example, if elf is not good at ladder, we can exploit the defect choose one specific weight to deal with it. |

I would use the next branch instead of some experimental one (even if it did not seem much worse). There have been some suggestions to fiddle with max threads on machines with a lot of GPU. |

We have no way of doing this right now (*). Indeed if someone enters something based on ELF weights directly, LZ would be expected to lose to it. (*) We have ~230k ELF self-play games, but this is probably not enough to train a super-ELF from scratch. |

|

are there any method to compute the percentage of a weight is base on another? |

|

@gcp Do you attend the competition youself? We all expect you could come to China(at least this is my thought, I'm confident that LZ can enter the final) |

|

@Tsun-hung |

|

is that true? There are 11 AIs from all over China, Japan, Korea, Europe and the United States that will compete in the competition, including fineart, octopus, golarxy, and northern lights from China, AQ, AYa, Raynz from Japan, Dolbaram and Baduki from Korea, and Leela Zero from Belgium. and ELF OpenGo from the United States. 共有遍布中日韩和欧美的11款AI将出征本次大赛,包括来自中国的绝艺、章鱼、星阵、北极光,来自日本的AQ、AYa、Raynz,来自韩国的Dolbaram、Baduki,来自比利时的 Leela Zero 和来自美国的ELF OpenGo。 http://bbs.flygo.net/bbs/forum.php?mod=viewthread&tid=106979&extra=page%3D1 |

|

confirmed http://weiqi.qq.com/news/9438.html |

|

CITIC champion CNY 450,000 ... even higher, though the total prize money 800,000 is lower. https://share.yikeweiqi.com/gonews/detail/id/18281/type/QQReadCount |

|

Octopus and Northern Lights... New Go AIs keep coming out of nowhere. |

|

Seems quite likely that ELF OpenGo will beat at least Leela Zero... |

|

A top 4 finish for LZ would be great (considering how strong the competition is) , and you never know, with a bit of luck... |

|

AQ, AYa, Raynz , Dolbaram and Baduki should be weaker than LZ. Only Fine Art and Elf are clearly stronger. |

|

pytorch/ELF#62 (comment) |

|

live log for the preliminaries of the game. June 23, 10:00 |

|

at least gcp had better use a hide weight stronger than all public lz weight ,include 20*256, except elf. |

|

@diadorak "Only Fine Art and Elf are clearly stronger." And Golaxy. |

|

http://weiqi.qq.com/news/9439.html Tian Yuandong: We have been encouraging everyone to use it since it was open source. This one and a half months I believe that many teams have found ways to improve. For example, adding some extra conditions to prevent ladder bugs will make OpenGo stronger. This competition will enable everyone to use our platform and that is to achieve the goal. The team still has many tasks to complete. We will not spend too much effort on the outcome of this game, so we do not expect OpenGo to get a high ranking. Of course, we still hope it can win. 田渊栋:我们开源后一直鼓励大家使用,这一个半月以来我相信很多团队已经找到改进的方案,比如说加一些额外的条件防止出现征子bug,这些都会让OpenGo变得更强。这次参赛,能让大家用我们的平台,那就是达到目的了。团队还有很多任务要完成,我们不会为这次比赛的胜负花太多力气在上面,所以我们并不期望OpenGo可以获得很高的名次。当然啦,我们还是希望它能赢。 |

|

PARANOiA-Rebirth in CGOS is probably AQ. |

|

http://txwq.qq.com/act/mgame/index.html webpage Chinese |

|

The official English name of "Northern Lights" (北极光) is Aurora. It's a registered participant of ICGA as well: Octopus is Leela Master with customized engine. |

|

Elf is run by Tencent... Since their program does not support multiple GPUs, Elf could indeed be a weaker one in the competition. pytorch/ELF#62 The ELF OpenGo team is not officially participating but the tournament organizers have our permission to use the Fox bot as they wish." |

|

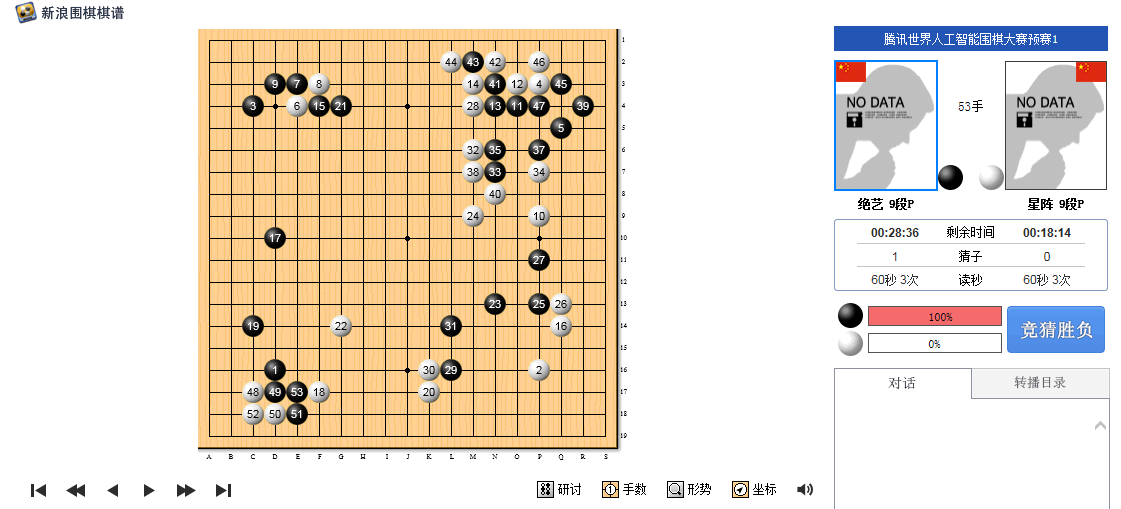

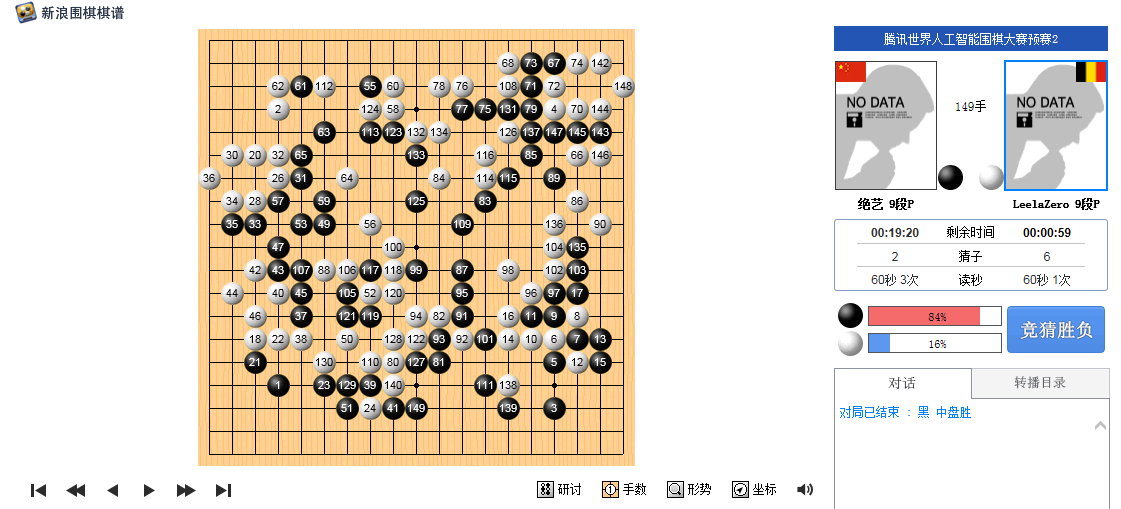

the match began |

|

LZ won the first game against AQ. |

|

round2 lz (w) vs fineart (b) b+ |

|

http://sports.sina.com.cn/go/2018-06-23/doc-iheirxye5187956.shtml and they use a loptop in the game? |

As if this is easy! |

|

For the record, for the Fuzhou (WAIGO) tournament, I recevied about 20000 RMB in prize money. For this tournament (Tencent), I have not received any news regarding prize money. |

|

I suggest that Tencent should write up a report of this tournament on the Internet. In English. Searching for it is like trying to find an obscure secret that might exist, perhaps, in a foreign language. Or it might not. Tencent is a $300 billion (USD) technology company, don't they know how to put up a web page? Why keep the world in the dark about their big contest? Don't they want publicity? I theorize that there might be some big website, behind the Great Firewall of China, in Chinese characters, that is full of articles about what happened at the "2018 Tencent World AI Weiqi (Go) Competition". Or maybe there isn't one. It's really hard to tell. I can tell that there is very little in English (and even less in Spanish, or French). Tencent, please let everyone know what happened. |

|

Please don't be panic. We are negotiating with Tencent committee now. They will give us result next week. |

|

Many commentaries of matches at http://foxwq.com/news/index/p/1.html (in Chinese) |

|

I received about ~6000 EUR prize money today from this tournament. |

|

Nice, definitly deserved ! If it's not too personnal, do you have a plan with it or just keep it for personal/family use ? |

|

Congratulations!

…On Mon, Nov 12, 2018 at 9:47 PM Gian-Carlo Pascutto < ***@***.***> wrote:

I received about ~6000 EUR prize money today from this tournament.

—

You are receiving this because you commented.

Reply to this email directly, view it on GitHub

<#1554 (comment)>,

or mute the thread

<https://github.com/notifications/unsubscribe-auth/AVBaeCi6H0-J2gVQ10hvmeCOzFL0wBLaks5uub49gaJpZM4UnP9L>

.

|

|

transparency is most appreciated, as well as personal effort |

|

@gcp Great news, of course the work yourself and all the contributors have done in the last year to make LZ so strong is priceless. Thanks so much. |

The RTX 2080 Ti doesn't look so attractive from perf/price/watts/availability point of view right now. I'm considering getting an RTX 2080 instead and seeing if we can make the training work with fp16 (inference already works). That would be a nice speedup over the GTX 1080 Ti at lower power usage. Other than that I plan to privately contact some of the people who made the largest code contributions and offer them a cut. It's probably impossible to do right by everyone here but I will use my best judgement and give it a shot. |

Your reasoning is sound =)

At the same time, the price seems to be dropping. |

|

fp16 is definitely interesting if possible |

I've got an RTX card - how does fp16 work with inference? I seem to get similar performance using single/half precision. Do I have to do a specific tuning for half or something else? It detects the card as not having fp16 compute support. |

We support both of these through fp16 compute support (during inference). The RX cards in theory should only benefit a little because they only save register space in fp16 mode, but empirically my RX560 actually becomes almost twice as fast in fp16/half mode. Vega should benefit a lot as it has fp16 compute, but I remember early reviews saying it is disabled in OpenCL (Edit: Some Googling shows newer drivers do have it enabled). For training it all depends on how good TensorFlow's support for AMD cards is. |

If the OpenCL driver doesn't expose fp16 support then we can't use it. Edit: The rumors I read say that NVIDIA blocks this on consumer cards, but that it works on V100. If that's true then RTX cards are currently not very interesting for running LZ, though they may still be useful for training. |

|

@gcp What you found agree with what I heard (that Vega can use fp16 compute now but RTX 20xx can't), except that V100 actually doesn't work yet. That's why people wants cuda/cudnn etc. so dearly... |

What does "doesn't work yet" mean? This? If it still doesn't then that's interesting, I found a claim the V100 did but RTX doesn't, but if you're right then even that is bullshit. Should be easy to confirm by checking the clinfo on a card and seeing if cl_khr_fp16 or 'half' capability is reported. I can confirm that the RTX cards don't have drivers that are capable of fp16 in OpenCL: So, NVIDIA wants everyone to rewrite to CUDA (exclusive to their platform) because they can't be arsed to write proper drivers. Apple wants everyone to rewrite to Metal (exclusive to their platform) because they can't be arsed to write proper drivers. I really look forward to the next platform. |

Update:original post:I asked a friend to run clinfo with V100 but Google Cloud won't assign one to him at the moment, so here is result on P100 instead (truncated for some reason, but the Device Extensions are shown): |

|

@gcp @alreadydone it uses a custom image provided by microsoft azure with everything preinstalled (gpu driver, cuda, cudnn, opencl) with clinfo in datascience 16.04 preinstalled (testworking) : then during tuning |

|

@alreadydone @gcp i already had the log from brand new ubuntu 18.04 + gpu driver latest version ppa with tesla V100 in test1000 (same script as test81) : with clinfo in ubuntu 18.04 + gpu drivers ppa + all latest packages

http://m.uploadedit.com/bbtc/15425314021.txt

clinfo after reboot (ubuntu 18.04 + gpu drivers ppa + all latest packages : test1000) : tuning after reboot (ubuntu 18.04 + gpu drivers ppa + all latest packages : test1000) : note : http://m.uploadedit.com/bbtc/1542529282479.txt note 2 : http://m.uploadedit.com/bbtc/1542531465359.txt for some reason the datascience image seems quite slower than my own install with ppa note 3 :

|

|

@gcp, spend whatever cut you feel you owe me in any way you think will best contribute to the future development of Leela Zero. |

OK, noted! |

http://weiqi.qq.com/special/109.html

IV. Contest System:

June 23-24: Preliminary contest (at site) as 5-7 rounds (subject to number of actual applicant teams) of Swiss-system contest. The top 8 enters the circle;

Early July: Top 8 circle (online). Seven rounds of circle contest on Tencent Foxwq platform (use black and white stones respectively for two games in each round). Top 4 enters site final;

Late July: Final (at site). The winner of 5-game contest in semi-final enters the final; the winner of 7-game contest in the final will be the champion.

(I) Contest Awards

Champion: CNY 400,000;

Runner-up: CNY 200,000;

3rd - 4th place: CNY 120,000;

5th - 8th place: CNY 60,000;

9th - 16th place: CNY 10,000.

@gcp I'm writing to enquire suggestion about

The text was updated successfully, but these errors were encountered: