Abstract

The extraordinary success of Machine Learning (ML) in many complex heuristic fields has promoted its introduction in more analytical engineering fields, improving or substituting many established approaches in Computer Aided Engineering (CAE), and also solving long-standing problems. In this chapter, we first review the ideas behind the most used ML approaches in CAE, and then discuss a variety of different applications which have been traditionally addressed using classical approaches and that now are increasingly the focus of ML methods.

You have full access to this open access chapter, Download chapter PDF

Similar content being viewed by others

Keywords

- Computer aided engineering

- Machine learning

- Classification

- Identification

- Prediction

- Supervised learning

- Unsupervised learning

- Training

- Evaluation

- Validation

- Regression

- Decision trees

- Support vector machines

- Reduction

- Genetic algorithms

- Neural networks

- Physics-constrained procedures

- Digital twins

- Deep neural operators

- Constitutive

- Multiscale modeling

- Surrogate models

- Finite element methods

- Solids

- Structural mechanics

- Fluids

- Manufacturing

- Design

1.1 Introduction

The purpose of Machine Learning algorithms is to learn automatically from data employing general procedures. Machine Learning (ML) is today ubiquitous due to its success in many current daily applications such as face recognition (Hassan and Abdulazeez 2021), speech (Malik et al. 2021) and speaker recognition (Hanifa et al. 2021), credit card fraud detection (Ashtiani and Raahemi 2021; Nayak et al. 2021), spam detection (Akinyelu 2021), and cloud security (Nassif et al. 2021). ML governs our specific Google searches and the advertisements we receive (Kim et al. 2001) based on our past actions, along many other interactions (Google cloud 2023). It even anticipates what we will type or what we will do. And, of course, ML schemes also rank us, scientists (Beel and Gipp 2009).

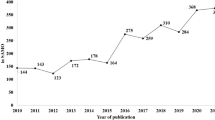

The explosion of applications of ML came with the increased computer power and also the ubiquitous presence of computers, cell phones, and other “smart” devices. These gave ML the spotlight to foster its widespread use to many other areas in which it had less presence. The success in many extremely useful areas such as speech and face recognition has contributed to this interest (Marr 2019). Today, ML may help you (through web services) to find a job, obtain a loan, find a partner, obtain an insurance, and, among others, also helps in the medical and legal services (Duarte 2018). Of course, ML raises many ethical issues, some of which are described, for example in Stahl (2021). However, the discovered power and success of ML in many areas have made a very important impact on our society and, remarkably, on how many problems are addressed. No wonder, the number of ML papers published in almost all fields has sharply increased in the last 10 years, with a rate following approximately Moore’s law (Frank et al. 2020).

Machine Learning is considered a part of Artificial Intelligence (AI) (Michalski et al. 2013). In essence, ML algorithms are general procedures and codes that, with the information from datasets, can give predictions for a wide range of problems (see Fig. 1.1). The main difference to classical programs is that the classical programs are developed for specific applications, like in Computer Aided Engineering, which is the topic of this chapter, to solve specific differential equations in integral forms. An example is how finite elements have been developed. In contrast, ML procedures are for much more general applications, being used almost unchanged in problems apparently unconnected as predicting the evolutions of stocks, spam filtering, face recognition, typing prediction, pharmacologic design, or materials selection. ML methods are different also from Expert Systems because these are based on fixed rules or fixed probability structures. ML methods excel when useful information needs to be obtained from massive amounts of data.

Overall Machine Learning (ML) process and contrast between efficiency and generality of the method. Hyperparameters are user-defined parameters which account for the type of problem, whereas parameters are optimized for best prediction. ML may be used for prediction and for classification. it is also often used as a tool for dimensionality reduction

Of course, generality comes usually with a trade-off regarding efficiency for a specific problem solution (Fig. 1.1), so the use of ML for the solution of simple problems, or for problems which can be solved by other more specific procedures, is typically inappropriate. Furthermore, ML is used when predictions are needed for problems which have not or cannot be accurately formulated; that is, when the variables and mathematical equations governing the problem are not fully determined—but physics-informed approaches with ML are now also much focused on, Raissi et al. (2019). Nonetheless, ML codes and procedures are still mostly used as general “black boxes”, typically employing standard implementations available in free and open-source software repositories. A number of input variables are then employed and some specific output is desired, which together comprises the input-to-output process learned and adjusted from known cases or from the structure of the input data. Some of these free codes are Scikit-learn (Pedregosa et al. 2011) (one of the best-known), Microsoft Cognitive Toolkit (Xiong et al. 2018), TensorFlow (Dillon et al. 2017) (which is optimal for Cuda-enabled Graphic Processing Unit (GPU) parallel ML), Keras (Gulli and Pal 2017), OpenNN (Build powerful models 2022), and SystemML (Ghoting et al. 2011), just to name a few. Other proprietary software, used by big companies, are AWS Machine Learning Services from Amazon (Hashemipour and Ali 2020), Cloud Machine Learning Engine from Google (Bisong 2019a), Matlab (Paluszek and Thomas 2016; Kim 2017), Mathematica (Brodie et al. 2020; Rodríguez and Kramer 2019), etc. Moreover, many software offerings have libraries for ML, and are often used in ML projects like Python (NumPy, Bisong 2019b, Scikit-learn, Pedregosa et al. 2011, and Tensorly, Kossaifi et al. 2016; see review in Stančin and Jović 2019), C++, e.g., Kaehler and Bradski (2016), Julia (a recent Just-In-Time (JIT) compiling language created with science and ML in mind, Gao et al. 2020; Innes 2018; Innes et al. 2019), and the R programming environment (Lantz 2019; Bischl et al. 2016; Molnar et al. 2018); see also Raschka and Mirjalili (2019), King (2009), Gao et al. (2020), Bischl et al. (2016). These software offerings also use many earlier published methods for standard computational tasks such as mathematical libraries (like for curve fitting, the solution of linear and nonlinear equations, the determination of eigenvalues and eigenvectors or Singular Value Decompositions), and computational procedures for optimization (e.g., the steepest descent algorithms). The offerings also use earlier established statistical and regression algorithms, interpolation, clustering, domain slicing (e.g., tessellation algorithms), and function approximations.

ML derives from the conceptually fuzzy (uncertain, non-deterministic) learning approach of AI. AI is devoted to mimicking the way the human learning process works—namely, the human brain, through the establishment of neurological connections based on observations, can perform predictions, albeit mostly only qualitative, of new events. And then, the more experience (data) has been gathered, the better are the predictions through experience reinforcements and variability of observations. In addition, classification is another task typically performed by the human brain. We classify photos, people, experiences, and so on, according to some common features: we search continuously for features that allow us to group and separate out things so that we can establish relations of outcomes to such groups. Abundant data, data structuring, and data selection and simplification are crucial pieces of this type of “fuzzy” learning and, hence, of ML procedures.

Based on these observations, neural network concepts were rather early developed by McCulloch and Pitts in 1943 and Hebb in 1949 (Hebb 2005), who wrote the well-known sentence “Cells that fire together, wire together”, meaning that the firing of one cell determines the actions of subsequent cells. While Hebb’s forward firing rule is unstable through successive epochs, it was the foundation for Artificial Neural Network (NN) theories. Probably due to the difficulties in implementations and computational cost in using NN, the widespread use of NN was delayed until the 1990s. The introduction of improvements in the procedures for backpropagation and optimization, as well as improvements in data acquisition, information retrieval, and data mining, made possible the application of NNs to real problems. Today, NNs are very flexible and are the basis of many ML techniques and applications. However, this delay also facilitated the appearance and use of other ML-related methods as expert systems and decision trees, and a myriad of pattern recognition and decision-making approaches.

Today, whenever a complex problem is found, especially if there is no sound theory or reliable formulation to solve it, ML is a valuable tool to try. In many cases, the result is successful and indeed even a good understanding of the behavior of the problem and the variables involved may be obtained. While the introduction of ML procedures into Computer Aided Engineering (CAE) took a longer time than in other areas, probably because for many problems the governing equations and effective computational procedures were known, ML is finally also focused on addressing complex and computationally intensive CAE solutions. In this chapter, we overview some of the procedures and applications of Machine Learning employed in CAE.

1.2 Machine Learning Procedures Employed in CAE

As mentioned, ML is often considered to be a subset of AI (Michalski et al. 2013; Dhanalaxmi 2020; Karthikeyan et al. 2021), although often ML is also recognized as a separate field itself which only has some intersection with AI (Manavalan 2020; Langley 2011; Ongsulee 2017). Deep Learning (DL) is a subset of ML. Although the use of NNs is the most common approach to address CAE problems and ML problems in general, there are many other ML techniques that are being used. We review below the fundamental aspects of these techniques.

1.2.1 Machine Learning Aspects and Classification of Procedures

Our objective in this section is to focus on various fundamental procedures commonly used in ML schemes.

1.2.1.1 Classification, Identification, and Prediction

ML procedures are mainly employed for three tasks: classification, identification (both may broadly be considered as classification), and prediction. An example of classification is the labeling of e-mails as spam or not spam (Gaurav et al. 2020; Crawford et al. 2015). Examples of identification are the identification of a type of behavior or material from some stress–strain history or from force signals in machining (Denkena et al. 2019; Penumuru et al. 2020; Bock et al. 2019), the identification of a nanostructure from optical microscopy (Lin et al. 2018), the identification of a person from a set of images (Ahmed et al. 2015; Ding et al. 2015; Sharma et al. 2020), and the identification of a sentence from some fuzzy input. Examples of prediction are the prediction of behavior of a material under some deformation pattern (Ye et al. 2022; Ibragimova et al. 2021; Huang et al. 2020), the prediction of a sentence from some initial words (Bickel et al. 2005; Sordoni et al. 2015), and the prediction of the trajectory of salient flying objects (Wu et al. 2017; Fu et al. 2020). Of course, there are some AI procedures which may not only belong to one of these categories, as the identification or prediction of governing equations in physics (Rai and Sahu 2020; Raissi and Karniadakis 2018). Clustering ML procedures are typically used for classification, whereas regression ML procedures are customarily used for prediction.

1.2.1.2 Expected and Unexpected Data Relations

Another relevant distinction is between ML approaches and Data Mining (DM). ML focuses on using known properties of data in classification or in prediction, whereas DM focuses on the discovery of new unknown properties or relations of data. However, ML, along information systems, is often considered part of DM (Adriaans and Zantinge 1997). The overlap of DM and ML is seen in cases like the discovery of unknown relations or in finding optimum state variables which may be, for example, given in physical equations. Note that ML typically assumes that we know beforehand the existence of relations (e.g., which are the relevant variables and what is the type of output we expect), but the purpose of DM is to research the existence of perhaps unexpected relations from raw data.

1.2.1.3 Statistical and Optimization Approaches within ML

Many procedures use, or are derived from, statistics, and in particular probability theory (Murphy 2012; Bzdok et al. 2018). In a similar manner, ML employs mostly optimization procedures (Le et al. 2011). The main conceptual difference between these theories and ML is the purpose of the developments. In the case of statistics, the purpose is to obtain inference or characteristics of the population such as the distribution and the mean (which of course could be used thereafter for predictions); see Fig. 1.2. In the case of ML, the purpose is to predict new outcomes, often without the need for statistically characterizing populations, and incorporate these outcomes in further predictions (Bzdok et al. 2018). ML approaches often support predictions on models. ML optimizes parameters for obtaining the best predictions as quantified by a cost function, and the values of these parameters are optimized also to account for the uncertainty in the data and in the predictions. ML approaches may use statistical distributions, but those are not an objective and their evaluation is often numerical (ML is interested in predictions). Also, while ML uses optimization procedures to obtain values of parameters, the objective is not to obtain the “optimum” solution to fit data, but a parsimonious model giving reliable predictions (e.g., to avoid overfitting).

1.2.1.4 Supervised, Unsupervised, and Reinforced Learning

It is typical to classify the ML procedures in supervised, unsupervised, semi-supervised, and reinforced learning (Raschka 2015; Burkov 2019, 2020).

In supervised learning, samples \(\{s_i\equiv \{\textbf{x}_i,y_i \}\}_{\{i=1,\ldots ,n\}}\in S\) with vectors of features \(\textbf{x}_i\) are labeled with a known result or label \(y_i\). The label may be a class, a number, a matrix, or other. The purpose of the ML approach in this case is (typically) to create a model that relates those known outputs \(y_i\) to the dataset samples through some combination of the \(j=1,\ldots ,N\) features \(x_{j(i)}\equiv x_{ji}\) of each sample i. \(x_{j(i)}\) are also referred to as data, variables, measurements, or characteristics, depending on the context or field of application. An example of a ML procedure could be to relate the seismic vulnerability of a building (label) as a function of features like construction type, age, size, location, building materials, maintenance, etc. Rosti et al. (2022), Zhang et al. (2019), Ruggieri et al. (2021). The ML purpose is here to be able to learn the vulnerability of buildings from known vulnerabilities of other buildings. The labeling could have been obtained from experts or from past earthquakes. Supervised learning is based on sufficient known data, and we want to determine predictions in the nearby domain. In essence, we can say that “supervised learning is a high-dimensional interpolation problem” (Mallat 2016; Gin et al. 2021). We note that supervised learning may be improved with further data when available, since it is a dynamic learning procedure, mimicking the human brain.

In unsupervised learning the samples \(s_i\) are unlabeled \((s_i\equiv \{\textbf{x}_i \})\), so the purpose is to label the samples from learning similitudes and common characteristics in the features of the samples; it is usually an instance-based learning. Typical unsupervised ML approaches are employed in clustering (e.g., classifying the structures by type in our previous example), dimensionality reduction (detecting which features are less relevant to the output label, for example because all or most samples have it, like doors in buildings), and outlier detection (e.g., in detecting abnormal traffic in the Internet, Salman et al. 2020, 2022; Salloum et al. 2020) for the case when very few samples have that feature. These approaches are similar to data mining.

Semi-supervised learning is conceptually a combination of the previous approaches but with specific ML procedures. In essence it is a supervised learning approach in which there are few labeled samples (output known) but many more unlabeled samples (output unknown), even sometimes with incomplete features, with some missing characteristics, which may be filled in by imputation techniques (Lakshminarayan et al. 1996; Ramoni and Sebastiani 2001; Liu et al. 2012; Rabin and Fishelov 2017). The point here is that by having many more samples with unassigned features, we can determine better the statistical distributions of the data and the possible significance of the features in the result, resulting in an improvement over using only labeled data for which the features have been used to determine the label. For example, in our seismic vulnerability example, imagine that one feature is that the building has windows. Since almost all buildings have windows, it is unlikely that this feature is relevant in determining the vulnerability (it will give little Information Gain; see below). On the contrary, if \(20\%\) of the buildings have a steel structure, and if the correlation is positive regarding the (lack of) vulnerability, it is likely that the feature is important in determining the vulnerability.

There is also another type of ML seldom used in CAE, which is reinforced learning (or reward-based learning). In this case, the computer develops and changes actions to learn a policy depending on the feedback, i.e. rewards which themselves modify the subsequent actions by maximizing the expected reward. It has some common concepts to supervised learning, but the purpose is an action, instead of a prediction. Hence, it is a typical ML approach in control dynamics (Buşoniu et al. 2018; Lewis and Liu 2013) with applications, for example, in the aeronautical industry (Choi and Cha 2019; Swischuk and Allaire 2019; He et al. 2021).

1.2.1.5 Data Cleaning, Ingestion, Augmentation, Curation, Data Evaluation, and Data Standardization

Data is the key to ML procedures, so datasets are usually large and obtained in different ways. The importance of data requires that the data is presented to the ML method (and maintained if applicable) in optimal format. To reach that goal requires many processes which often also involve ML techniques. For example, in a dataset there may be data which are not in a logical range, or with missing entries, hence they need to be cleaned. ML techniques may be used to determine outliers in datasets, or assign values (data imputation) according to the other features and labels present in other samples in the dataset. Different dataset formats such as qualitative entries like “good”, “fair”, or “bad”, and quantitative entries like “1–9”, may need to be converted (encoded) to standardized formats, using also ML algorithms (e.g., assigning “fair” to a numerical value according to samples in the dataset). This is called data ingestion. ML procedures may also need to have data distributions determined, that is, data evaluated to learn if a feature follows a normal distribution or if there is a consistent bias, and also standardize data according to min–max values or the same normal distribution, for example to avoid numerical issues and give proper weight to different features. In large dynamic databases, much effort is expended for the proper maintenance of the data so it remains useful, using many operations such as data cleaning, organization, and labeling. This is called data curation.

Another aspect of data treatment is the creation of a training set, a validation set, and a test set from a database (although often test data refers to both the validation and the test set, in particular when only one model is considered). The purpose of the training set is to train the ML algorithm: to create the “model”. The purpose of the validation set is to evaluate the models in an independent way from the training set, for example to see which hyperparameters are best suited, or even which ML method is best suited. Examples may be the number of neurons in a neural network or the smoothing hyperparameter in splines fitting; different smoothing parameters yield different models for the same training set, and the validation set helps to select the best values, obtaining the best predictions but avoiding overfitting. Recall that ML is not interested in the minimum error for the training set, but in a predictive reliable model. The test set is used to evaluate the performance of the final selected model from the overall learning process. An accurate prediction of the training set with a poor prediction of the test set is an indicator of overfitting: we have reached an unreliable model. A model with similar accuracy in the training and test sets is a good model. The training set should not be used for assessing the accuracy of the model because the parameters and their values have been selected based on these data and hence overfitting may not be detected. However, if more data is needed for training, there are techniques for data augmentation, typically performing variations, transformations, or combinations of other data (Shorten and Khoshgoftaar 2019). A typical example is to perform transformations of images (rotations, translations, changes in light, etc., Inoue 2018). Data augmentation should be used with care, because there is a risk that the algorithms correlate unexpected features with outputs: samples obtained by augmentation may have repetitive features because in the end they are correlated samples. These repetitive features may mislead the algorithms so they identify the feature as a key aspect to correlate to the output (Rice et al. 2020). An example is a random spot in an image that is being used for data augmentation. If the spot is present in the many generated samples, it may be correlated to the output as an important feature.

Modified from Latorre and Montáns (2020)

Using B-splines to fit hyperelastic stress–strain data. Regression may be performed in nominal stress–stretch (\(P-\lambda )\) axes, or in true stress–strain \(\sigma -E\) axes; note that the result is different. While usual test representation in hyperelasticity is in the (\(P-\lambda )\) axes, regression is preferred in \(\sigma -E\) because of the symmetry of tension and compression in logarithmic strains). B-spline fit of experimental data with a overfitting and b proper fit using regularization based on stability conditions.

1.2.1.6 Overfitting, Regularization, and Cross-Validation

Overfitting and model complexity are important aspects in ML; see Fig. 1.3. Given that the data has errors and often some stochastic nature, a model which gives zero error in the training data does not mean that it is a good model; indeed it is usually a hint that it is the opposite: a presentation of overfitting (Fig. 1.3a). Best models are those less complex (parsimonious) models that follow Occam’s razor. They are as simple as possible but still have a great predictive power. Hence, the less parameters, the better. However, it is often difficult to simplify ML models to have few “smart” parameters, so model reduction and regularization techniques are often used as a “no-brainer” remedy for overfitting. Typical regularization (“smoothing”) techniques are Least Absolute Shrinkage Selection Operator, sparse or L1 regularization (LASSO) (Xu et al. 2008) and L2, called Ridge (Tikhonov 1963), or noise (Bishop 1995) regularization, or regression. The LASSO scheme “shrinks” the less important features (hence is also used for feature selection), whereas the L2 scheme gives a more even weight to them. The combination of both is known as elastic net regularization (Zou and Hastie 2005).

Training and test sets: k-fold generation of training and validation sets from data. Number of data: 9, data for training and model selection: 6, data for final validation test (test set): 3, number of folds for model selection: 3, data in each fold: 2, number of models: 3 (k = 3). Sometimes, the validation test is also considered as test set. The 10-fold cross-validation is a common choice

Model selection taking into account model fitness and including a penalization for model complexity is often performed by employing the Akaike Information Criterion (AIC). Given a collection of models arising from the available data, the AIC allows to compare these models among them, so as to help select the best fitted model. In essence, the AIC not only estimates the relative amount of information lost by each model but also takes into account its parsimony. In other words, it deals with the trade-off between overfitting and underfitting by computing

where p is the number of parameters of the model (complexity penalty) and \(\mathfrak {L}\) is the maximum of the likelihood function of the model, the joint probability of the observed data as a function of the p parameters of the model (see next section). Therefore, the chosen model should be the one with the minimum AIC. In essence, the AIC penalizes the number of parameters to choose the best model—and that is the model not only with as few parameters as possible but also with a large probability of reproducing the data using these parameters.

Dividing the data into two sets, one for training and one for validation, very often produces overfitting, especially for small datasets. To avoid the overfitting, the method of k-fold cross-validation is frequently used. In this process, the data is divided into k datasets. \(k-1\) of them are used to train the model and the remaining one is used for validation. This process is repeated k times, by employing each of the possible datasets for validation. The final result is given by the arithmetic mean of the k results (Fig. 1.4). Leave-one-out Cross-Validation (LOOCV) is the special case where the number of folds is the same as the number of samples, so the test set has only one element. While LOOCV is expensive in general (Meijer and Goeman 2013), for the linear case it is very efficient because all the errors are obtained simultaneously with a single fit through the so-called hat matrix (Angelov and Stoimenova 2017).

1.2.2 Overview of Classical Machine Learning Procedures Used in CAE

The schemes we present in this section are basic ingredients of many ML algorithms.

1.2.2.1 Simple Regression Algorithms

The simplest ML approach is much older than the ML discipline: linear and nonlinear regression. In the former case, the purpose is to compute the weights \(\textbf{w}\) and the offset b of the linear model \(\tilde{y}\equiv f(\textbf{x})=\textbf{w}^T \textbf{x}+b\), where \(\textbf{x}\) is the vector of features. The parameters \(\textbf{w},b\) are obtained through the minimization of the cost function (MSE: Mean Squared Error)

with respect to them, which in this case is the average of the loss function \(\mathcal {L}_i=(\tilde{y}_i-y_i)^2\), where the \(y_i\) are the known values, \(\tilde{y}_i=f(\textbf{x}_i;\{\textbf{w},b\})\) are the predictions, and the subindex i refers to sample i, so \(\textbf{x}_i\) is the vector of features of that sample. Of course, in linear regression, the parameters are obtained simply by solving the linear system of equations resulting from the quadratic optimization problem. Other regression algorithms are similar, as for example spline, B-spline regressions, or P-spline (penalized B-splines) regressions, used in nonlinear mechanics (Crespo et al. 2017; Latorre and Montáns 2017) or used to perform efficiently an inverse of functions which does not have an analytical solution (Benítez and Montáns 2018; Eubank 1999; Eilers and Marx 1996). In all these cases, smoothing techniques are fundamental to avoid overfitting.

While it is natural to state the regression problem as a minimization of the cost function, it may be also formulated in terms of the likelihood function \(\mathfrak {L}\). Given some training data \((y_i,\textbf{x}_i)\) (with labels \(y_i\) for data \(\textbf{x}_i\)), we seek the parameters \(\textbf{w}\) (for simplicity we now include b in the set \(\textbf{w}\)) that minimize the cost function (e.g., MSE); or equivalently we seek the set \(\textbf{w}\) which maximizes the likelihood \(\mathfrak {L}(\textbf{w}|(y,\mathbf {x)})=p(y|\textbf{x};\textbf{w})\) for those parameters \(\textbf{w}\) to give the probability representation for the training data, which is the same as the probability of finding the data \((y,\textbf{x})\) given the distribution by \(\textbf{w}\). The likelihood is the “probability” by which a distribution (characterized by \(\textbf{w}\)) represents all given data, whereas the probability is that of finding data if the distribution is known. Assuming data to be identically distributed and independent such that \(p(y_{1},y_2,\ldots , y_n|\textbf{x}_1,\textbf{x}_2,\ldots , \textbf{x}_n;\textbf{w})=p(y_{1}|\textbf{x}_1;\textbf{w})p(y_2|\textbf{x}_2;\textbf{w})\ldots p(y_{n}|\textbf{x}_n;\textbf{w})\), the likelihood is

or

Choosing the linear regression \(\tilde{y}=\textbf{w}^T\textbf{x}\) (including b and 1 respectively in \(\textbf{w}\) and \(\textbf{x}\)), and a normal distribution of the prediction, obtained by assuming a zero-centered normal distribution of the error

it is immediate to verify that the maximization of the log-likelihood in Eq. (1.4) is equivalent to minimizing the MSE in Eq. (1.2) (regardless of the value of the variance \(\sigma ^2\)).

A very typical regression used in ML is logistic regression (Kleinbaum et al. 2002; Hosmer Jr et al. 2013), for example to obtain pass/fail (1/0) predictions. In this case, a smooth predictor output \(y\in [0,1]\) can be interpreted as a probability \(p(y=1|\textbf{x}):=p(\textbf{x})\). The Bernoulli distribution (which gives p for \(y_{i}=1\) and \((1-p)\) for \(y_{i}=0\)), or equivalently

describes this case for a given \(\textbf{x}_i\), as it is immediate to check. The linear regression is assigned to the logit function to convert the \((-\infty ,\infty )\) range into the desired probabilistic [0, 1] range

The logit function is the logarithm of the ratio between the odds of \(y=1\) (which are p) and \(y=0\) (which are \((1-p)\)). The probability \(p(\textbf{x})\) may be factored out from Eq. (1.7) as

which is known as the sigmoid function. Neural Networks frequently use logistic regression with the sigmoid model function where the parameters are obtained through the minimization of the proper cost function, or through the maximization of the likelihood. In this latter case, the likelihood of the probability distribution in Eq. (1.6) is

where \(y_i\) are the labels (with value 1 or 0) and \(p(\textbf{x}_i)\) are their (sigmoid-based) probabilistic predicted values given by Eq. (1.8) for the training data, which are a function of the parameters \(\textbf{w}\). The maximization of the log-likelihood of Eq. (1.9) for the model parameters gives the same solution as the minimization of the cross-entropy

Another type of regression often used in ML is Kernel Regression. A Kernel is a positive-definite, typically non-local, symmetric weighting function \(K(\textbf{x}_i,\textbf{x})=K(\textbf{x},\textbf{x}_i)\), centered in the attribute, with unit integral. The idea is similar to the use of shape functions in finite element formulations. For example, the Gaussian Kernel is

where \(\sigma \) is the bandwidth or smoothing parameter (deviation), and the weight for sample i is \(w_i(\textbf{x})=K_i(\textbf{x})/\sum _{j=1}^n K_j(\textbf{x})\). The predictor, using the weights from the kernel, is \(f(\textbf{x})=\sum _{i=1}^n w_i(\textbf{x})y_i\) (although kernels may be used also for the labels). The cost function to determine \(\sigma ^{2}\) or other kernel parameters may be

where the last summation term is the LOOCV, which excludes sample i from the set of predictions (recall that there are n different \(f^{)i(}\) functions). Equation (1.12) focuses in essence on the minimum squared error for the solution. As explained below, kernels are also employed in Support Vector Machines to deal with nonlinearity and in dimensionality reduction of nonlinear problems to reduce the space.

1.2.2.2 Naïve Bayes

Naïve Bayes (NB) schemes are frequently used for classification (spam e-mail filtering, seismic vulnerability, etc.), and may be Multinomial NB or Gaussian NB. In both cases the probability theory is employed.

NB procedures operate as follows. From the training data, the prior probabilities for the different classes are computed, e.g., vulnerable or safe, p(V) and p(S), respectively, in our seismic vulnerability example. Then, for each feature, the probabilities are computed within each class, e.g., the probability that a vulnerable (or safe) structure is made of steel, \(p(\text {steel}|V)\) (or \(p(\text {steel}|S)\)). Finally, given a sample outside the training set, the classification is obtained from the largest probability considering the class and the features present in the sample, e.g., \(p(V)p(\text {steel}|V)p(\ldots |V)\ldots \) or \(p(S)p(\text {steel}|S)p(\ldots |V)\ldots \), and so on. Gaussian NB are applied when the features have continuous (Gaussian) distributions, as for example the height of a building in the seismic vulnerability example. In this case the feature conditioned probabilities \(p(\cdot |V)\) are obtained from the respective normal distributions. Logarithms of the probabilities are frequently used to avoid underflows.

1.2.2.3 Decision Trees (DT)

Decision trees are nonparametric. The simplest and well-known decision tree generator is the Iterative Dichotomiser 3 (ID3) algorithm, a greedy strategy. The scheme is used in the “guess who?” game and is also in essence the idea behind the root-finding bisection method or the Cuthill–McKee renumbering algorithm. The objective is, starting from one root node, to select at each step the feature and condition from the data that maximizes the Information Gain G (maximizes the benefit of the split), resulting in two (or more) subsequent leaf nodes. For example, in a group of people, the first optimal condition (maximizing the benefit of the split) is typically if the person is male or female, resulting in the male leaf and in the female leaf, each with \(50\%\) of the population, and so on. In seismic vulnerability, it could be if the structure is made of masonry, steel, wood, or Reinforced Concrete (RC). The gain G is the difference between the information entropies before (H(S), parent entropy) and after the split given by the feature at hand j. Let us denote by \(x_{j(i)}\) the feature j of sample i, by \(\textbf{x}_i\) the array of features of sample i, and by \(x_j\) the different features (we omit the sample index if no confusion is possible). If \(H(S|x_{j})\) are the children entropies after the split by feature \(x_j\), the Gain is

where the \(S_j\) are the subsets of S as a consequence of the split using feature (or attribute) \(x_j, p_j\) is the subset probability (number of samples \(s_i\) in subset \(S_j\) divided by the number of samples in the compete set S), and

where H(S) is the information entropy of set S for the possible labels \(y_j,j=1,\ldots ,l\), so Eq. (1.13) results in

Example of determination of the feature with most information gain. If we choose feature \(x_1\) for sorting, we find two subsets, \(S_1=\{s_i\text { such that } x_1=1\}=\{s_2,s_3,s_4\}\) and \(S_2=\{s_i\text { s.t. }x_1=0\}=\{s_1\}\). In \(S_1\) there are two elements (\(s_3,s_4\)) with label A, and one element (\(s_2\)) with label B, so the probabilities are 2/3 for label A and 1/3 for label B. In \(S_2\) the only element (\(s_1\)) has label B, so the probabilities are 0/1 for label A and 1/1 for label B. The entropy of subset \(S_1\) is \(H(S_1)=-\tfrac{2}{3}\log _2\tfrac{2}{3}-\tfrac{1}{3}\log _2\tfrac{1}{3}=0.92\). The entropy of subset \(S_2\) is \(H(S_2)=-0-\tfrac{1}{1}\log _2\tfrac{1}{1}=0\). In a similar form, since there are a total of 4 samples, two with label A and two with label B, the parent entropy is \(H(S)=-\tfrac{2}{4}\log _2\tfrac{2}{4}-\tfrac{2}{4}\log _2\tfrac{2}{4}=1\). Then, the information gain is \(G=H(S)-p(S_1)H(S_1)-p(S_2)H(S_2)=1-\tfrac{3}{4}0.92-\tfrac{1}{4}0=0.31\), where \(p(S_i)\) is the probability of a sample being in subset \(S_i\), i.e. 3/4 for \(S_1\) and 1/4 for \(S_2\). Repeating the computations for the other two features, it is seen that feature \(x_3\) is the one that has the best information gain. Indeed the information gain is \(G=1\) because it fully separates the samples according to the labels

The gain G is computed for each feature \(x_j\) (e.g., windows, structure type, building age, and soil type). The feature that maximizes the Gain is the one selected to generate the next level of leaves. The decision tree building process ends when the entropy reaches zero (the samples are perfectly classified). Figure 1.5 shows a simple example with four samples \(s_i\) in the dataset, each with three features \(x_j\) of two possible values (0 and 1), and one label y of two possible values (A and B). The best of the three features is selected as that one which provides the most information gain. It is seen that feature 1 produces some information gain because after the split using this feature, the samples are better classified according to the label. Feature 2 gives no gain because it is useless to distinguish the samples according to the label (it is in 50% each), and feature 3 is the best one because it fully classifies the samples according to the label (A is equivalent to \(x_3=1\), and B is equivalent to \(x_3=0\)). As for the Cuthill–McKee renumbering algorithm, there is no proof of reaching the optimum.

While DT are typically used for classification, there are regression trees in which the output is a real number. Other decision tree algorithms are the C4.5 (using continuous attributes), Classification And Regresssion Tree (CART), and Multivariate Adaptive Regression Spline (MARS) schemes.

1.2.2.4 Support Vector Machines (SVM), k-Means, and k-Nearest Neighbors (kNN)

The Support Vector Machine (SVM) is a technique which tries to find the optimal hyperplane separating groups of samples for clustering (unsupervised) or classification (supervised). Consider the function \(z(\textbf{x})=\textbf{w}^T \textbf{x}+b\) for classification. The model is

where the parameters \(\{\textbf{w},b\}\) are obtained by minimizing \(\tfrac{1}{2}|\textbf{w}|^2\) (or equivalently \(|\textbf{w}|^2\) or \(|\textbf{w}|\)) subject to \(y_i(\textbf{w}^T \textbf{x}_i+b)\ge 1 \;\;\forall i\) such that the decision boundary \(f(\textbf{x})=0\) given by the hyperplane has maximum distance to the groups of the samples; see Fig. 1.6. The minimization problem (in primal form) using Lagrange multipliers \(\alpha _i \) is

or in penalty form

A measure of certainty for sample i is based on its proximity to the boundary; i.e. \((\textbf{w}^T \textbf{x}_i+b)/|\textbf{w}|\) (the larger the distance to the boundary, the more certain the classification of the sample). Of course, SVMs may be used for multiclass classification, e.g., using the One-to-Rest approach (employing k SVMs to classify k classes) or the One-to-One approach (employing \(\tfrac{1}{2} k(k-1)\) SVMs to classify k classes); see Fig. 1.6.

Taking the derivative of the Lagrangian in squared brackets in Eq. (1.17) with respect to \(\textbf{w}\) and b, we get that at the minimum

and substituting it in the primal form given in Eq. (1.17), the minimization problem may be written in its dual form

and with \(b=y_j-\textbf{w}^T\textbf{x}_j\) being \(\textbf{x}_j\) any active (support) vector, with \(\alpha _j>0\). Then, \(z=\textbf{w}^T\textbf{x}+b\) is \(z=\sum _i\alpha _iy_i\textbf{x}_i^T\textbf{x}+b\). Instead of searching for the weights \(w_i, i=1,\ldots ,N\) (N is the number of features of each sample), we search for the coefficients \(\alpha _i,i=1,\ldots ,n\) (n is the number of samples).

Nonlinear separable cases may be addressed through different techniques as using positive slack variables \(\xi _i\ge 0\) or kernels. When using slack variables (Soft Margin SVM), for each sample i we write \(y_i (\textbf{w}^T \textbf{x}_i+b)\ge 1-\xi _i\) and we apply a L1 (LASSO) regularization by minimizing \(\tfrac{1}{2} |\textbf{w}|^2 + C \sum _i \xi _i\) subject to the constraints \(y_i(\textbf{w}^T \textbf{x}_i + b)\ge 1-\xi _i\) and \(\xi _i\ge 0\), where C is the penalization parameter. In this case, the only change in the dual formulation is the constraint for the Lagrange multipliers: \(C\ge \alpha _i\ge 0\), as it can be easily verified.

When using kernels, the kernel trick is typically employed. The idea behind the use of kernels is that if data is linearly non-separable in the features space, it may be separable in a larger space; see, for example, Fig. 1.7. This technique uses the dual form of the SVM optimization problem. Using the dual form

the equations only involve inner products of feature vectors of the type \((\textbf{x}_i^T \textbf{x}_j)\), ideal for using a kernel trick. For example, the case shown in Fig. 1.8 is not linearly separable in the original features space, but using the mapping \(\mathbf {\phi }(\textbf{x}):=\left[ x_1^2,x_2^2,\sqrt{2} x_1 x_2 \right] ^T\) to an augmented space, we find that the samples are linearly separable in this space. Then, for performing the linear separation in the transformed space, we have to compute z in that transformed space (Representer Theorem, Schölkopf et al. 2001)

to substitute the inner products in the original space by inner products in the transformed space. These operations (transformations plus inner products in the high-dimensional space) can be expensive (in complex cases we need to add many dimensions). However, in our example we note that

so it is not necessary to use the transformed space because the inner product can be equally calculated in both spaces. Indeed note that, remarkably, we even do not need to know explicitly \(\mathbf {\phi }(\textbf{x})\), because the kernel \(K(\textbf{a},\textbf{b})= (\textbf{a}^T\textbf{b})^2\) is fully written in the original space and we never need \(\mathbf {\phi }(\textbf{x})\). Then we just solve

Examples of kernel functions are the polynomial \(K(\textbf{a},\textbf{b})=(\textbf{a}^T \textbf{b} + 1)^d\), where d is the degree, and the Gaussian radial basis function (which may be interpreted as a polynomial form with infinite terms)

where \(\gamma =1/(2\sigma ^2 )>0 \).

Considering clustering, another algorithm similar in nature to SVM is used and is called the k-means (unsupervised technique creating k clusters). The idea here is to employ a distance measurement in order to determine the optimal center of clusters and the optimal decision boundaries between clusters. The case \(k=1\) is essentially the Voronoi diagram. Another simple approach is the k-Nearest Neighbors (k-NN), a supervised technique also employed in classification. This technique uses the labels of the k-nearest neighbors to predict the label of the target points (e.g., by some weighting method).

1.2.2.5 Dimensionality Reduction Algorithms

When problems have too many features (or data, measurements), dimensionality reduction techniques are employed to reduce the number of attributes and gain insight into the most meaningful ones. These are typically employed not only in pattern recognition and image processing (e.g., identification or compression) but also to determine which features, data, or variables are most relevant for the learning purpose. In essence, the algorithms are similar in nature to determining the principal modes in a dynamic response, because with that information, relevant mechanical properties (mass distribution, stiffness, damping), and the overall response may be obtained. In ML, classical approaches are given by Principal Component Analysis (PCA) based on Pearson’s Correlation Matrix (Abdi and Williams 2010; Bro and Smilde 2014), Singular Value Decomposition (SVD), Proper Orthogonal Decomposition (POD) (Berkooz et al. 1993), Linear (Fisher’s) Discriminant Analysis (LDA) (Balakrishnama and Ganapathiraju 1998; Fisher 1936), Kernel (Nonlinear) Principal Component Analysis (kPCA), Hofmann et al. (2008), Alvarez et al. (2012), Local Linear Embedding (LLE) (Roweis and Saul 2000; Hou et al. 2009), Manifold Learning (used also in constitutive modeling) (Cayton 2005; Bengio et al. 2013; Turaga et al. 2020), Uniform Manifold Approximation and Projection (UMAP) (McInnes et al. 2018), and autoencoders (Bank et al. 2020; Zhuang et al. 2021; Xu and Duraisamy 2020; Bukka et al. 2020; Simpson et al. 2021). Often, these approaches are also used in clustering.

LLE is one of the simplest nonlinear dimension reduction processes. The idea is to identify a global space with smaller dimension that reproduces the proximity of data in the higher dimensional space; it is a k-NN approach. First, we determine the weights \(w_{ij}\), such that \(\sum w_{ij}=1\), which minimize the error

in the representation of a point from the local space given by the k-nearest points (k is a user-prescribed hyperparameter), so

Then, we search for the images \(\textbf{y}_i\) of \(\textbf{x}_i\) in the lower dimensional space, simply by considering that the computed \(w_{ij}\) reflect the geometric properties of the local manifold and are invariant to translations and rotations. Given \(w_{ij}\), we now look for the lower dimensional coordinates \(\textbf{y}_i\) that minimize the cost function

a Principal Component Analysis. The principal components are those with largest variations (largest eigenvalues of the variances matrix). b Linear Discriminant Analysis to separate clusters. It is seen that feature \(x_1\) is not a good choice to determine if a sample belongs to a given cluster, but there is a features combination (a line) which gives the best discrimination between clusters. That combination maximizes the distance between means of clusters whereas minimizes the dispersion of samples within the clusters

Isometric Mapping (ISOMAP) techniques are similar, but use geodesic k-node-to-k-node distances (computed by Dijkstra’s 1959 or the Floyd–Warshall 1962 algorithms to find the shortest path between nodes) and look for preserving them in the reduced space. Another similar technique is the Laplacian eigenmaps scheme (Belkin and Niyogi 2003), based on the non-singular lowest eigenvectors of the Graph Laplacian \(\textbf{L}=\textbf{d}-\textbf{w}\), where \(d_{ii}=\sum _j w_{ij}\) gives the diagonal degree matrix and \(w_{ij}\) are the edge weights, computed for example using the Gaussian kernel \(w_{ij}=K(\textbf{x}_i,\textbf{x}_j )=\exp (-|\textbf{x}_i-\textbf{x}_j|^2 /(2\sigma ^2 ))\). Within the same k-neighbors family, yet more complex and advanced, are Topological Data Analysis (TDA) techniques. A valuable overview may be found in Chazal and Michel (2021); see also the references therein.

For the case of PCA, it is typical to use the covariance matrix

where the overbar denotes the mean value of the feature, and \(x_{j(i)}\) is feature j of sample i. The eigenvectors and eigenvalues of the covariance matrix are the principal components (directions/values of maximum significance/relevance), and the number of them selected as sufficient is determined by the variance ratios; see Fig. 1.9(a). PCA is a linear unsupervised technique. The typical implementation uses mean-corrected samples, as in kPCA, so in such case \(S_{jk}=\frac{1}{n} \sum _{i=1}^n x_{j(i)} x_{k(i)}\), or in matrix notation \(\textbf{S}=\tfrac{1}{n}\textbf{X}\textbf{X}^T\). kPCA (Schölkopf et al. 1997) is PCA using kernels (such as polynomials, the hyperbolic tangent, or the Radial Basis Function (RBF)) to address the nonlinearity by expanding the space. For example, using the RBF, we construct the kernel matrix \(K_{ij}\), for which the components are obtained from the samples i, j as \(K_{ij}=\exp (-\gamma |\textbf{x}_i-\textbf{x}_j|^2)\) . The RBF is then centered in the transformed space by (note that being centered in the original features space does not mean that the features are also automatically centered in the transformed space, hence the need for this operation)

where \(\textbf{1}\) is a \(n\times n\) matrix of the unit entry “1”. Then \(\bar{\textbf{K}}(\textbf{x}_i,\textbf{x}_j)=\bar{\mathbf {\phi }}(\textbf{x}_i)^T \bar{\mathbf {\phi }}(x_j)\) with the centered \(\bar{\mathbf {\phi }}(\textbf{x}_i)={\mathbf {\phi }}(\textbf{x}_i)-1/n \sum _{r=1}^n {\mathbf {\phi }}(\textbf{x}_r)\). The larger eigenvalues are the principal components in the transformed space, and the corresponding eigenvectors are the samples already projected onto the principal axes.

In contrast to PCA, the purpose of LDA is typically to improve separability of known classes (a supervised technique), and hence maximize information in this sense: maximizing the distance between the mean values of the classes and, within each class, minimizing the variation. It does so through the eigenvalues of the normalized between-classes scatter matrix \(\textbf{S}_w^{-1} \textbf{S}_b\) (the between-variances by the within-variances) where

and \(\bar{\textbf{x}}\) is the overall mean vector of the features \(\textbf{x}\) and \(\textbf{m}_i\) is the mean vector of those within-class i. If \(\bar{\textbf{x}}=\textbf{m}_i\) the class is not separable from the selected features. Frequently used nonlinear extensions of LDA are the Quadratic Discriminant Analysis (QDA) (Tharwat 2016; Ghosh et al. 2021), Flexible Discriminant Analysis (FDA) (Hastie et al. 1994), and Regularized Discriminant Analysis (RDA) (Friedman 1989).

Proper Orthogonal Decompositions (POD) are frequently motivated in PCA and are often used in turbulence and in reducing dynamical systems. It is a technique also similar to classical modal decomposition. The idea is to decompose the time-dependent solution as

and compute the Proper Orthogonal Modes (POMs) \(\mathbf {\varphi }_p(\textbf{x})\) that maximize the energy representation (L2-norm). In essence, we are looking for the set of “discrete functions” \(\mathbf {\varphi }_p(\textbf{x})\) that best represent \(\textbf{u}(\textbf{x},t)\) with the lowest number of terms P. Since these are computed as discretized functions, several snapshots \(\textbf{u}(\textbf{x},t_i), i=1, \ldots , n\) are grabbed in the discretized domain, i.e.

Then, the POD vectors are the eigenvectors of the sample covariance matrix. If the snapshots are corrected to have zero mean value, the covariance matrix is

The POMs may also be computed using the SVD of \(\textbf{U}\) (the left singular vectors are the eigenvectors of \(\textbf{U}\textbf{U}^T\)) or auto-associative NNs (Autoencoder Neural Networks that replicate the input in the output but using a hidden layer of smaller dimension). To overcome the curse of dimensionality when using too many features (e.g., for parametric analyses), the POD idea is generalized in Proper Generalized Decomposition (PGD), by assuming approximations of the form

where \(\mathbf {\phi }_i^j (x_j)\) are the unknown vector functions (usually also discretized and computed iteratively, for example using a greedy algorithm), and “\(\circ \)” stands for the Hadamard or entry-wise product of vectors. Note that, in general we cannot use the separability \(\Phi (x,y)\ne \phi (x)\psi (y)\) but PGDs look for the best \(\phi _i(x)\psi _i(y)\) choices for the given problem such that we can say \(\Phi (x,y)\simeq \sum _i \phi _i (x)\psi _i(y)\) in a sufficiently small number of addends (hence with a reduced complexity). The power of the idea is that for a large number n of features, determining functions of the type \(\Phi (x_1,x_2,\ldots , x_n)\) is virtually impossible, but determining products and additions of scalar functions is feasible.

The UMAP and t-SNE schemes are based on the concept of a generalized metric or distance between samples. A symmetric and normalized (between 0 and 1) metric is defined as

where the unidirectional distance function is defined as

where \(\rho _{ij} =|\textbf{x}_i-\textbf{x}_j|\) and \(\rho _i^k=|\textbf{x}_i-\textbf{x}_k |\), with k referring to the k-nearest neighbor (\(\rho _i^1\) refers to the nearest neighbor to i). Here k is an important hyperparameter. Note that \(d_i^j=1\) if i, j are nearest neighbors, and \(d_i^j\rightarrow 0\) for far away neighbors. We are looking for a new set of lower dimensional features \(\textbf{z}\) to replace \(\textbf{x}\). The same generalized distance \(d_{ij}(\textbf{z}_i,\textbf{z}_j)\) may be applied to the new features. To this end, through optimization techniques, like the steepest descent, we minimize the fuzzy set dissimilarity cross-entropy (or entropy difference) like the Kullback–Leibler (KL) divergence (Hershey and Olsen 2007; Van Erven and Harremos 2014), which measures the difference between the probability distributions \(d_{ij}(\textbf{x}_i,\textbf{x}_j )\) and \(d_{ij}(\textbf{z}_i,\textbf{z}_j )\), and their complementary values \([1-d_{ij}(\textbf{x}_i,\textbf{x}_j )]\) and \([1-d_{ij}(\textbf{z}_i,\textbf{z}_j )]\) (recall that \(d\in (0,1]\), so it is seen as a probability distribution)

Note that the KL scheme is not symmetric with respect to the distributions. If distances in both spaces are equal for all the samples, KL \(=0\). In general, a lower dimensional space gives KL \(\ne 0\), but with the dimension of \(\textbf{z}\) fixed, the features (or combinations of features) that give a minimum KL considering all n samples represent the optimal selection.

Autoencoders are a type of neural network, discussed below, and can be interpreted as a nonlinear generalization of PCA. Indeed, an autoencoder with linear activation functions is equivalent to a SVD.

1.2.2.6 Genetic Algorithms

Genetic Algorithms (Mitchell 1998) in ML (or more generally evolutionary algorithms) are in essence very similar to those employed in optimization (Grefenstette 1993; De Jong 1988). They are metaheuristic algorithms which include the steps in natural evolution: (1) Initial population, (2) a fitness function, (3) a (nature-like) selection according to fitness, (4) crossover (the gene combination), (5) mutation (random alteration). After running many generations, convergence is expected to the superspecies. Feature selection and database reduction is a typical application (Vafaie and De Jong 1992). The variety of implementations is large and the implementations depend on the specific problem addressed (e.g., polymer design, Kim et al. 2021, and materials modeling, Paszkowicz 2009), but the essence and ingredients are similar.

1.2.2.7 Rosenblatt’s Perceptron, Adaline (Adaptive Linear Neuron) Model, and Feed Forward Neural Networks (FFNN)

Currently, the majority of ML algorithms employed in practice are some type or variation of Neural Networks. Deep Learning (DL) refers to NNs with many layers. While the NN theory was proposed some decades ago, efficient implementations facilitating the solution of real-world problems have been established only in the late 1980s and early 1990s. NNs are based on the ideas from McCulloch and Pitts (1943) describing a simple model for the work of neurons, and on Rosenblatt’s perceptron (Rosenblatt 1958); see Fig. 1.10. The Adaline model (Widrow and Hoff 1962) (Fig. 1.10) introduces the activation function to drive the learning process from the different samples, instead of the dichotomic outputs from the samples. This activation function is today one of the keystones of NNs. The logistic sigmoid function is frequently used. There are other alternatives such as the ReLU (Rectified Linear Unit; the Macaulay ramp function) or the hyperbolic tangent

NNs are made from many such artificial neurons, typically arranged in several layers, with each layer \(l=1,\ldots ,L\) containing many neurons. The output from the network is defined as a composition of functions

where the \(\textbf{f}^l\) are the neuron functions of the layer (often also denoted by \(\mathbf {\sigma }^l\) in the sigmoid case), typically arranged by groups in the form \(\textbf{f}^l(\textbf{W}^l \textbf{x}^l+\textbf{b}_l )\), where \(\textbf{W}^l\) is the matrix of weights, \(\textbf{z}^l:=\textbf{W}^l \textbf{x}^l+\textbf{b}_l\), \(\textbf{x}^l=\textbf{y}^{l-1}=\textbf{f}^{l-1}(\textbf{z}^{l-1})\) are the neuron inputs and output of the previous layer (the features for the first function; \(\textbf{y}^0\equiv \textbf{x}\)), and \(\textbf{b}_l\) is the layer bias vector, which is often incorporated as a weight on a unit bias by writing \(\textbf{z}^l=\textbf{W}^l \textbf{x}^l\), so \(\textbf{x}^l\) has also the index 0, and \(x_0^l=1\); see Fig. 1.11. The output may be also a vector \(\textbf{y}\equiv \textbf{y}^L\). The purpose of the learning process is to learn the optimum values of \(\textbf{W}^l\), \(\textbf{b}_l\). The power of the NNs is that a simple architecture, with simple functions, may be capable of reproducing more complex functions. Indeed, Rosenblatt’s scheme discussed below may give any linear or nonlinear function. Of course, complex problems will require many neurons, layers, and data, but the overall structure of the method is almost unchanged.

The Feed Forward Neural Network (FFNN) with many layers, as shown in Fig. 1.11, is trained by optimization algorithms (typically modifications of the steepest descent) using the backpropagation algorithm, which consists in computing the sensitivities using the chain rule from the output layer to the input layer, so for each layer, the information of the derivatives of the subsequent layers are known. For example, in Fig. 1.11, assume that the error is computed as \(E=\tfrac{1}{2} \left( \textbf{y}-\textbf{y}^{\text {exp}}\right) ^T \left( \textbf{y}-\textbf{y}^{\text {exp}}\right) \) (logistic errors are more common, but we consider herein this simpler case). Then, if \(\alpha \) is the learning rate (a hyperparameter), the increment between epochs of the parameters is

where \(\partial \textbf{y}/ \partial W_{oi}^l\) is computed through the chain rule. Figure 1.12 shows a simple example with two layers and two neurons per layer; superindices refer to layer and subindices to neuron. For example, following the green path, we can compute

where \(\partial {y}_2 / \partial {z}_2^2\) is the derivative of the selected activation function evaluated at the iterative value \({z}_2^2\) and \(\partial {z}_2^2 /\partial W_{21}^2={x}_1^2\) is also the known iterative value. As an example of a deeper layer, consider the red line in Fig. 1.12

where we note that the first square bracket corresponds to the last layer, the second to the previous one, and so on, until the term in curly brackets addressing the specific network variable. The procedures had issues with exploding or vanishing gradients (especially with sigmoid and hyperbolic tangent activations), but several improvements in algorithms (gradient clipping, regularization, skipping of connections, etc.), have resulted in efficient algorithms for many hidden layers. The complex improvement techniques, with an important number of “tweaks” to make them work in practical problems, is one of the reasons why “canned” libraries are employed and recommended (Fig. 1.13).

Neural networks are capable of generating functions to fit data regardless of the dimension of the space and the nonlinearity of the problem. In this example, three neurons of the simplest Rosenblatt’s perceptron consisting of a step function are used to generate a local linear function. This function is obtained by simply changing the weights of the bias and adding the results of the three neurons with equal weights. Other more complex functions may be obtained with different weights. Furthermore, the firing step function may be changed by generally better choices as the ReLU or the sigmoid functions

1.2.2.8 Bayesian Neural Networks (BNN)

A Bayesian Neural Network (BNN) is a NN that uses probability and the Bayes theorem relating conditional probabilities

where \(p(\textbf{x}|\textbf{z})=p(\textbf{x}\cap \textbf{z})/p(\textbf{z})\). A typical example is to consider a probabilistic distribution of the weights (so we take \(\textbf{z}=\textbf{w}\)) for a given model, or a probabilistic distribution of the output (so we take \(\textbf{z}=\textbf{y}\)) not conditioned to a specific model. These choices can be applied in uncertainty quantifications (Olivier et al. 2021), with metal fatigue a typical application case (Fernández et al. 2022a; Bezazi et al. 2007). Given the complexity in passing analytical distributions through the NN, sampling is often performed through Monte Carlo approaches. The purpose is to learn the mean and standard deviations of the distributions of the weights, assuming they follow a normal distribution \(w_i\approx \mathcal {N}({\mu }_i,{\sigma }_i^2)\). For the case of predicting an output y, considering one weight, the training objective is to maximize the probability of the training data for the best prediction, or minimize the likelihood of a bad prediction as

where \(\text {KL}(p_1,p_2)\) is the Kullback–Leibler divergence regularization for the probabilities \(p_1\) and \(p_2\) explained before, \(\mathcal {L}\) is the loss function and \(f(\textbf{x}_i;\mathcal {N}(\mathbf {\mu },\mathbf {\Sigma }))\) is the function prediction for y from data \(\textbf{x}_i\), assuming a distribution \(\mathcal {N}(\mathbf {\mu },\mathbf {\Sigma })\). With the learned optimal parameters \(\mathbf {\mu }^*,\mathbf {\Sigma }^*\), the prediction for new data \(\textbf{x}\) is

where the \(\mathcal {N}_k(\mathbf {\mu }^*,\mathbf {\Sigma }^*)\) are the numerical evaluations of the normal distributions for the obtained parameters.

1.2.2.9 Convolutional Neural Networks (CNNs)

Although a Convolutional Neural Network (CNN) is a type of FFNN, they were formulated with the purpose of classifying images. CNNs combine one or several convolution layers combined with pooling layers (for feature extraction from images) and with normal final FFNN layers for classification (Fig. 1.14). Pooling is also named subsampling since performing averaging or extracting the maximum of a patch are the typical operations. In the convolutional layers, input data has usually several dimensions, and they are filtered with a moving patch array (also named kernel, with a specific stride length and edge padding; see Fig. 1.15) to reduce the dimension and/or to extract the main characteristics of, or information from, the image (like looking at a lower resolution version or comparing patterns with a reference). Each padding using a patch over the same record is called a channel, and successive or chained paddings are called layers, Fig. 1.15. The same padding, with lower dimension, may be applied over different sample dimensions (a volume). In essence, the idea is similar to the convolution of functions in signal processing to extract information from the signal. Indeed this is also an application of CNN. The structure of CNNs have obvious and interesting applications in multiscale modeling in materials science, and in constitutive modeling (Yang et al. 2020; Frankel et al. 2022), and thus also in determining material properties (Xie and Grossman 2018; Zheng et al. 2020), behavior prediction (Yang et al. 2020), and obviously in extracting microstructure information from images (Jha et al. 2018).

Convolutional network layer with depth 1, stride length 2 (the filter patch moves 2 positions at once) and edge padding 1 (the boundary is filled with one row and column of zeroes). Pooling is similar, but usually selects the maximum or average of a moving pad to avoid correlation of features with location

1.2.2.10 Recurrent Neural Networks (RNN)

RNNs are used for sequences of events, so they are extensively used in language processing (e.g., in “Siri” or translators from Google), and they are effective in unveiling and predicting sequences of events (e.g., manufacturing) or when history is important (path-dependent events as in plasticity Mozaffar et al. 2019; Li et al. 2019; du Bos et al. 2020). In Fig. 1.16, a simple RNN is shown with \(\,^{t}\textbf{h}\) representing the history variables, such that the equations of the RNN are

The unfolding of a RNN allows for a better understanding of the process; see Fig. 1.16. Following our previous seismic example, they can be used to study the prediction of new earthquakes from previous history; see, for example, Panakkat and Adeli (2009), Wang et al. (2017). A RNN is similar in nature to a FFNN, and is frequently mixed with FF layers, but recycling some output at a given time or event for the next time(s) or event(s). RNNs may be classified according to the number of related input–output instances as one-to-one, one-to-many (one input instance to many output instances), many-to-one (e.g., classifying a voice or determining the location of an earthquake), and many-to-many (translation into a foreign language); see Fig. 1.16. A frequent ingredient in RNN are “gates” (e.g., in Long Short-Term Memory (LSTM), see Fig. 1.17) to decide which data is introduced, output, or forgotten.

1.2.2.11 Generative Adversarial Networks (GAN)

A Generative Adversarial Network (GAN) (Goodfellow et al. 2020) is a type of ML based on game theory (sum-zero game where one agent’s benefit is the other agent’s loss) with the purpose to learn the probability distribution of the set of training samples (i.e. to solve the generative modeling problem). Although different algorithms have been presented within the GAN paradigm, most are based on NN agents, consisting of a generative NN and a discriminative NN. These NNs have opposite targets. The generative NN tries to fool the discriminative NN, whereas the discriminative NN tries to distinguish original (true) data from generated data presented by the generative NN. With successive events, both NNs learn—the generative NN learns how to fool the other NN, and the discriminative NN how not to be fooled. The type of NN depends on the problem at hand. For example when distinguishing images, a CNN is typically used. In this case, for example, in the falsification of photographs (deepfake Yadav and Salmani 2019), several images of a person are presented and the discriminator has to distinguish if they are actual pictures or manufactured photos. This technology is used to generate fake videos, and to detect them (Duarte et al. 2019; Yu et al. 2022) and is used in CAE tasks like the reconstruction of turbulent velocity fields (by comparing images) (Deng et al. 2019). GANs are also used in the generation of compliant designs, for example in the aeronautical industry (Shu et al. 2020), and also to solve differential equations (Yang et al. 2020; Randle et al. 2020). A recent overview of GANs may be found in Aggarwal et al. (2021).

1.2.2.12 Ensemble Learning

While NNs may bring accurate predictions through extensive training, obtaining such predictions may not be computationally efficient. Ensemble learning consists of employing many low-accuracy but efficient methods to obtain a better prediction through a sort of averaging (or voting). Following our seismic vulnerability example, it would be like asking several experts to give a fast opinion (for example just showing them a photograph) about the vulnerability of a structure or a site, instead of asking one of them to perform a detailed study of the structure (Giacinto et al. 1997; Tang et al. 2022). The methods used may be, for example, shallow NNs and decision tress.

1.3 Constraining to, and Incorporating Physics in, Data-Driven Methods

ML usually gives no insight into the physics of the problem. The classical procedures are considered “black boxes”, with inherent positive (McCoy et al. 2022) and negative (Gabel et al. 2014) attributes. While these black boxes are useful in applications to solve classical fuzzy problems where they have been extensively applied in economy, image or speech recognition, pattern recognition, etc. they have inherently several drawbacks regarding use in mechanical engineering and applied sciences. The first drawback is the large amount of data they require to yield relevant predictions. The second one is the lack of fulfillment of basic physics principles (e.g., the laws of thermodynamics). The third one is the lack of guarantees in the optimality or uniqueness of the prediction, or even guarantees in the reasonableness of the predicted response. The fourth one is the computational cost, if including training, when compared using classical methods. Although once trained, the use may be much faster than many classical methods. Probably, the most important drawback is the lack of physical insight into the problem, because human learning is complex and needs a detailed understanding of the problem to seek creative solutions to unsolved problems. Indeed, in contrast to “unexplainable” AI, now also eXplainable Artificial Intelligence (XAI) is being advocated (Arrieta et al. 2020).

ML may be a good avenue to obtain engineering solutions, but to yield valuable (and reliable), scientific answers, physics principles need to be incorporated in the overall procedure. To this end, the predictions and learning of the previously overviewed methods, or other more elaborated ones, should be restricted to solution subsets that do fulfill all the basic principles. That is, conservation of energy, of linear momentum, etc. should be fulfilled. When doing so, we use data-driven physics-based machine learning (or modeling) (Ströfer et al. 2018), or “gray-box” modeling (Liu et al. 2021; Asgari et al. 2021; Regazzoni et al. 2020; Rogers et al. 2017). The simplest and probably most used method to impose such principles (an imposition called “whitening” or “bleaching” Yáñez-Márquez 2020) is the use of penalties and Lagrange multipliers in the cost function (Dener et al. 2020; Borkowski et al. 2022; Rao et al. 2021; Soize and Ghanem 2020), but there are many options and procedures to incorporate physics either in the data or in the learning (Karpatne et al. 2017). The resulting methods and disciplines which mix data science and physical equations are often referred to as Physics Based Data Science (PBDS), Physics-Informed Data Science (PIDS), Physics-Informed Machine Learning (PIML) (Karniadakis et al. 2021; Kashinath et al. 2021), Physics Guided Machine Learning (PGML) (Pawar et al. 2021; Rai and Sahu 2020), or Data-Based Physics-Informed Engineering (DBPIE).

In a nutshell, data-based physically informed ML allows for the use of data science methods without most of the shortcomings of physics-uninformed methods. Namely, we do not need much data (Karniadakis et al. 2021), solutions are often meaningful, the results are more interpretable, the methods much more efficient, and the number of meaningless spurious solutions is substantially smaller. The methods are no longer a sophisticated interpolation but can give predictions outside the domain given by the training data. In essence, we incorporate the knowledge acquired in the last centuries.

In PBDS, meaningful internal variables play a key role. In classical engineering modeling, as in constitutive modeling, variables are either external (position, velocity, and temperature) or internal (plastic or viscous deformations, damage, and deformation history). The external variables are observable (common to all methods), whereas the internal variables, being non-observable, are usually based on assumptions to describe some internal state. Here, a usual difference with ML methods is that a physical meaning is typically assigned to internal variables in classical methods, but for example when using NNs, internal variables (e.g., those in hidden layers) have typically no physical interpretation. However, the sought solution of the problem relates external variables both through physical principles or laws and through state equations. To link both physical principles and state equations, an inherent physical meaning is therefore best given (or sought) for the internal ML variables (Carleo et al. 2019; Vassallo et al. 2021). Physical principles are theoretical, of general validity, and unquestioned for the problem at hand (e.g., mass, energy, momentum conservation, and Clausius-Duhem inequality), whereas state equations are a result of assumptions and observations at the considered scales, leading to equations tied to some conditions, assumptions, and simplifications of sometimes questionable generality and of more phenomenological nature.

In essence, the possible ML solutions obtained from state equations must be restricted to those that fulfill the basic physical principles, constituting the physically viable solution manifold, and that is often facilitated by the proper selection of the structure of the ML method and the involved internal variables. These physical constraints may be incorporated in ML procedures in different ways, depending on the analysis and the ML method used, as we briefly discuss below (see also an example in Ayensa Jiménez 2022).

1.3.1 Incorporating Physics in, and Learning Physics From, the Dataset

An objective may be to discover a hidden physical structure in data or physical relations in data (Chinesta et al. 2020). One purpose may be to reduce the dimension of the problem by discovering relations in data that lead to the reduction of complexity (Alizadeh et al. 2020; Aletti et al. 2015). This is similar to calculating dynamical modes of displacements (Bathe and Wilson 1973; Bathe 2006) or to discover the invariants when relating strain components in hyperelasticity (Weiss et al. 1996; Bonet and Wood 1997). Another objective may be to generate surrogate models (Bird et al. 2021; Straus and Skogestad 2017; Jansson et al. 2003; Liu et al. 2021) to discover which variables have little relevance to the physical phenomenon, or quantifying uncertainty in data (Chan and Elsheikh 2018; Trinchero et al. 2018; Abdar et al. 2021; Zhu et al. 2019). Learning physics from data is in essence a data mining approach (Bock et al. 2019; Kamath 2001; Fischer et al. 2006). Of course, this approach is always followed in classical analysis when establishing analytical models, for example when neglecting time effects for quasi-stationary problems, or when reducing the dimension of 3D problems to plane stress or plane strain conditions. However, ML seeks an unbiased automatic approach to the solution of a problem.

1.3.2 Incorporating Physics in the Design of a ML Method

A natural possibility to incorporate physics in the design of the ML method is to impose some equations, in some general form, onto the method, and the purpose is to learn some of the freedom allowed by the equations (Tartakovsky et al. 2018). That is the case when learning material parameters (typical in Materials Science informatics, Agrawal and Choudhary 2016; Vivanco-Benavides et al. 2022; Stoll and Benner 2021), selecting specific functions from possibilities (e.g., selecting hardening models or hyperelastic models from a library of functions, Flaschel et al. 2021, 2022), or learning corrections of models (e.g., deviations of the “model” from reality).

Physics in the design of the ML procedure may also be incorporated by imposing some specific meaning to the hidden variables (introducing physically meaningful internal variables as the backstress in plasticity) or the structure (as the specific existence and dependencies of variables in the yield function) (Ling et al. 2016). Doing so, the resulting learned relations may be better interpreted and will be in compliance with our previous knowledge (Abueidda et al. 2021; Miyazawa et al. 2019; Zhan and Li 2021).

A large amount of ML methods in CAE are devoted to learning constitutive (or state) equations (Leygue et al. 2018), with known conservation principles and kinematic relations (equilibrium and compatibility), as well as the boundary conditions (González et al. 2019b; He et al. 2021). In essence, we can think of a “physical manifold” and a “constitutive manifold”, and we seek the intersection of both for some given actions or boundary and initial conditions (Ibañez et al. 2018; He et al. 2021; Ibañez et al. 2017; Nguyen and Keip 2018; Leygue et al. 2018). Autoencoders are a good tool to reduce complexity and filter noise (He et al. 2021). Other methods are devoted to inferring the boundary conditions or material constitutive inhomogeneities (e.g., local damage) assuming that the general form of the constitutive relations is known (this is a ML approach to the classical inverse problem of damage/defect detection).

Data-based constitutive modeling. Left: purely data-driven technique, where no constitutive manifold is directly employed. Instead, the closest known data point is located and is used to compute the solution. Center, right: constitutive (e.g., stress–strain) data points are used to compute a constitutive manifold (which may include uncertainty quantification), which is then employed to compute the solution in a classical manner