From University of Washington (US)

July 15, 2021

Leila Gray

206.475.9809

leilag@uw.edu

New artificial intelligence software can compute protein structures in 10 minutes.

Scientists have waited months for access to high-accuracy protein structure prediction since DeepMind presented remarkable progress in this area at the 2020 Critical Assessment of Structure Prediction, or CASP14, conference. The wait is now over.

Researchers at the Institute for Protein Design at the University of Washington School of Medicine in Seattle have largely recreated the performance achieved by DeepMind on this important task. These results are published online today, July 15, by the journal Science.

Unlike DeepMind, the UW Medicine team has already made their method, dubbed RoseTTAFold, freely available. Scientists from around the world are now using it to build protein models to accelerate their own research. Soon after its recent upload, the program was downloaded from GitHub by over 140 independent research teams.

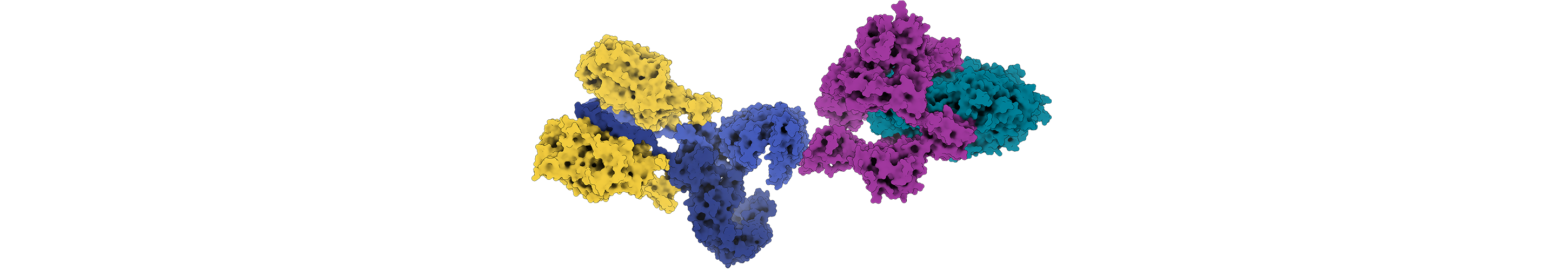

Researchers used artificial intelligence to generate hundreds of new protein structures, including this 3D view of human interleukin-12 bound to its receptor. Credit: Ian Haydon.

Proteins consist of strings of amino acids that fold up into intricate microscopic shapes.These unique shapes in turn give rise to nearly every chemical process inside living organisms. By better understanding protein shapes, scientists can speed up the development of new treatments for cancer, COVID-19, and thousands of other medical disorders.

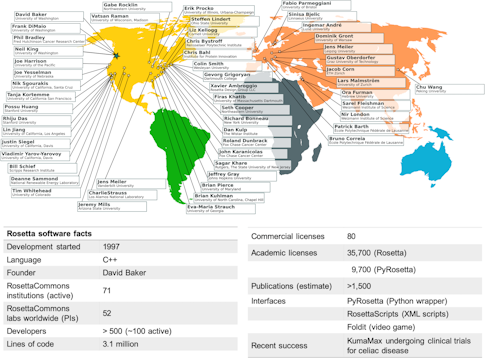

“It has been a busy year at the Institute for Protein Design, designing COVID-19 therapeutics and vaccines and launching these into clinical trials, along with developing RoseTTAFold for high accuracy protein structure prediction. I am delighted that the scientific community is already using the RoseTTAFold server to solve outstanding biological problems,” said senior author David Baker, Howard Hughes Medical Institute Investigator, professor of biochemistry, and director of the Institute for Protein Design at UW Medicine.

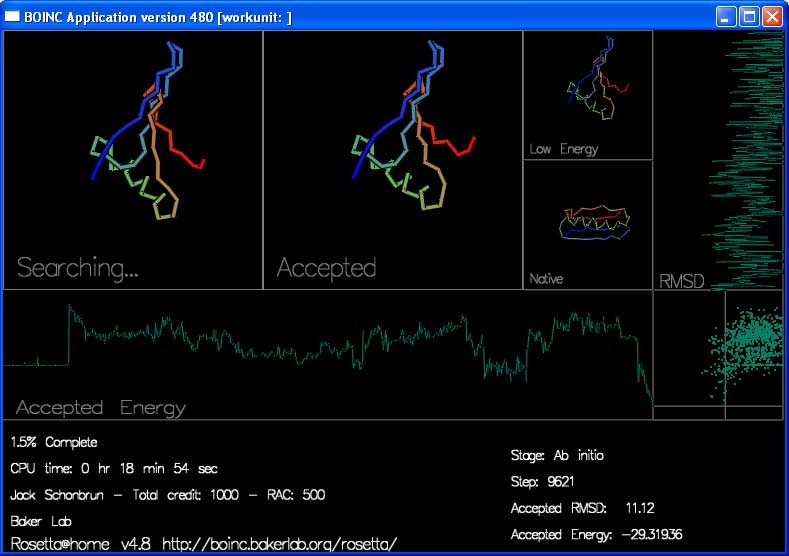

David Baker’s Rosetta@home project, a project running on BOINC software from UC Berkeley

David Baker’s Rosetta@home project, a project running on BOINC software from UC Berkeley

In the new study, a team of computational biologists led by Baker developed a software tool called RoseTTAFold that uses deep learning to quickly and accurately predict protein structures based on limited information. Without the aid of such software, it can take years of laboratory work to determine the structure of just one protein.

RoseTTAFold, on the other hand, can reliably compute a protein structure in as little as 10 minutes on a single gaming computer.The team used RoseTTAFold to compute hundreds of new protein structures, including many poorly understood proteins from the human genome. They also generated structures directly relevant to human health, including for proteins associated with problematic lipid metabolism, inflammation disorders, and cancer cell growth. And they show that RoseTTAFold can be used to build models of complex biological assemblies in a fraction of the time previously required.

RoseTTAFold is a “three-track” neural network, meaning it simultaneously considers patterns in protein sequences, how a protein’s amino acids interact with one another, and a protein’s possible three-dimensional structure. In this architecture, one-, two-, and three-dimensional information flows back and forth, allowing the network to collectively reason about the relationship between a protein’s chemical parts and its

folded structure.

“We hope this new tool will continue to benefit the entire research community,” said

Minkyung Baek, a postdoctoral scholar who led the project in the Baker laboratory at UW Medicine.

This work was supported in part by Microsoft, Open Philanthropy Project, Schmidt

Futures, Washington Research Foundation, National Science Foundation, Wellcome

Trust, and the National Institute of Health. A full list of supporters is available in the Science paper.

See the full article here .

five-ways-keep-your-child-safe-school-shootings

Please help promote STEM in your local schools.

The University of Washington (US) is one of the world’s preeminent public universities. Our impact on individuals, on our region, and on the world is profound — whether we are launching young people into a boundless future or confronting the grand challenges of our time through undaunted research and scholarship. Ranked number 10 in the world in Shanghai Jiao Tong University rankings and educating more than 54,000 students annually, our students and faculty work together to turn ideas into impact and in the process transform lives and our world. For more about our impact on the world, every day.

So what defines us —the students, faculty and community members at the University of Washington? Above all, it’s our belief in possibility and our unshakable optimism. It’s a connection to others, both near and far. It’s a hunger that pushes us to tackle challenges and pursue progress. It’s the conviction that together we can create a world of good. Join us on the journey.

The University of Washington (US) is a public research university in Seattle, Washington, United States. Founded in 1861, University of Washington is one of the oldest universities on the West Coast; it was established in downtown Seattle approximately a decade after the city’s founding to aid its economic development. Today, the university’s 703-acre main Seattle campus is in the University District above the Montlake Cut, within the urban Puget Sound region of the Pacific Northwest. The university has additional campuses in Tacoma and Bothell. Overall, University of Washington encompasses over 500 buildings and over 20 million gross square footage of space, including one of the largest library systems in the world with more than 26 university libraries, as well as the UW Tower, lecture halls, art centers, museums, laboratories, stadiums, and conference centers. The university offers bachelor’s, master’s, and doctoral degrees through 140 departments in various colleges and schools, sees a total student enrollment of roughly 46,000 annually, and functions on a quarter system.

University of Washington is a member of the Association of American Universities(US) and is classified among “R1: Doctoral Universities – Very high research activity”. According to the National Science Foundation(US), UW spent $1.41 billion on research and development in 2018, ranking it 5th in the nation. As the flagship institution of the six public universities in Washington state, it is known for its medical, engineering and scientific research as well as its highly competitive computer science and engineering programs. Additionally, University of Washington continues to benefit from its deep historic ties and major collaborations with numerous technology giants in the region, such as Amazon, Boeing, Nintendo, and particularly Microsoft. Paul G. Allen, Bill Gates and others spent significant time at Washington computer labs for a startup venture before founding Microsoft and other ventures. The University of Washington’s 22 varsity sports teams are also highly competitive, competing as the Huskies in the Pac-12 Conference of the NCAA Division I, representing the United States at the Olympic Games, and other major competitions.

The university has been affiliated with many notable alumni and faculty, including 21 Nobel Prize laureates and numerous Pulitzer Prize winners, Fulbright Scholars, Rhodes Scholars and Marshall Scholars.

In 1854, territorial governor Isaac Stevens recommended the establishment of a university in the Washington Territory. Prominent Seattle-area residents, including Methodist preacher Daniel Bagley, saw this as a chance to add to the city’s potential and prestige. Bagley learned of a law that allowed United States territories to sell land to raise money in support of public schools. At the time, Arthur A. Denny, one of the founders of Seattle and a member of the territorial legislature, aimed to increase the city’s importance by moving the territory’s capital from Olympia to Seattle. However, Bagley eventually convinced Denny that the establishment of a university would assist more in the development of Seattle’s economy. Two universities were initially chartered, but later the decision was repealed in favor of a single university in Lewis County provided that locally donated land was available. When no site emerged, Denny successfully petitioned the legislature to reconsider Seattle as a location in 1858.

In 1861, scouting began for an appropriate 10 acres (4 ha) site in Seattle to serve as a new university campus. Arthur and Mary Denny donated eight acres, while fellow pioneers Edward Lander, and Charlie and Mary Terry, donated two acres on Denny’s Knoll in downtown Seattle. More specifically, this tract was bounded by 4th Avenue to the west, 6th Avenue to the east, Union Street to the north, and Seneca Streets to the south.

John Pike, for whom Pike Street is named, was the university’s architect and builder. It was opened on November 4, 1861, as the Territorial University of Washington. The legislature passed articles incorporating the University, and establishing its Board of Regents in 1862. The school initially struggled, closing three times: in 1863 for low enrollment, and again in 1867 and 1876 due to funds shortage. University of Washington awarded its first graduate Clara Antoinette McCarty Wilt in 1876, with a bachelor’s degree in science.

19th century relocation

By the time Washington state entered the Union in 1889, both Seattle and the University had grown substantially. University of Washington’s total undergraduate enrollment increased from 30 to nearly 300 students, and the campus’s relative isolation in downtown Seattle faced encroaching development. A special legislative committee, headed by University of Washington graduate Edmond Meany, was created to find a new campus to better serve the growing student population and faculty. The committee eventually selected a site on the northeast of downtown Seattle called Union Bay, which was the land of the Duwamish, and the legislature appropriated funds for its purchase and construction. In 1895, the University relocated to the new campus by moving into the newly built Denny Hall. The University Regents tried and failed to sell the old campus, eventually settling with leasing the area. This would later become one of the University’s most valuable pieces of real estate in modern-day Seattle, generating millions in annual revenue with what is now called the Metropolitan Tract. The original Territorial University building was torn down in 1908, and its former site now houses the Fairmont Olympic Hotel.

The sole-surviving remnants of Washington’s first building are four 24-foot (7.3 m), white, hand-fluted cedar, Ionic columns. They were salvaged by Edmond S. Meany, one of the University’s first graduates and former head of its history department. Meany and his colleague, Dean Herbert T. Condon, dubbed the columns as “Loyalty,” “Industry,” “Faith”, and “Efficiency”, or “LIFE.” The columns now stand in the Sylvan Grove Theater.

20th century expansion

Organizers of the 1909 Alaska-Yukon-Pacific Exposition eyed the still largely undeveloped campus as a prime setting for their world’s fair. They came to an agreement with Washington’s Board of Regents that allowed them to use the campus grounds for the exposition, surrounding today’s Drumheller Fountain facing towards Mount Rainier. In exchange, organizers agreed Washington would take over the campus and its development after the fair’s conclusion. This arrangement led to a detailed site plan and several new buildings, prepared in part by John Charles Olmsted. The plan was later incorporated into the overall University of Washington campus master plan, permanently affecting the campus layout.

Both World Wars brought the military to campus, with certain facilities temporarily lent to the federal government. In spite of this, subsequent post-war periods were times of dramatic growth for the University. The period between the wars saw a significant expansion of the upper campus. Construction of the Liberal Arts Quadrangle, known to students as “The Quad,” began in 1916 and continued to 1939. The University’s architectural centerpiece, Suzzallo Library, was built in 1926 and expanded in 1935.

After World War II, further growth came with the G.I. Bill. Among the most important developments of this period was the opening of the School of Medicine in 1946, which is now consistently ranked as the top medical school in the United States. It would eventually lead to the University of Washington Medical Center, ranked by U.S. News and World Report as one of the top ten hospitals in the nation.

In 1942, all persons of Japanese ancestry in the Seattle area were forced into inland internment camps as part of Executive Order 9066 following the attack on Pearl Harbor. During this difficult time, university president Lee Paul Sieg took an active and sympathetic leadership role in advocating for and facilitating the transfer of Japanese American students to universities and colleges away from the Pacific Coast to help them avoid the mass incarceration. Nevertheless many Japanese American students and “soon-to-be” graduates were unable to transfer successfully in the short time window or receive diplomas before being incarcerated. It was only many years later that they would be recognized for their accomplishments during the University of Washington’s Long Journey Home ceremonial event that was held in May 2008.

From 1958 to 1973, the University of Washington saw a tremendous growth in student enrollment, its faculties and operating budget, and also its prestige under the leadership of Charles Odegaard. University of Washington student enrollment had more than doubled to 34,000 as the baby boom generation came of age. However, this era was also marked by high levels of student activism, as was the case at many American universities. Much of the unrest focused around civil rights and opposition to the Vietnam War. In response to anti-Vietnam War protests by the late 1960s, the University Safety and Security Division became the University of Washington Police Department.

Odegaard instituted a vision of building a “community of scholars”, convincing the Washington State legislatures to increase investment in the University. Washington senators, such as Henry M. Jackson and Warren G. Magnuson, also used their political clout to gather research funds for the University of Washington. The results included an increase in the operating budget from $37 million in 1958 to over $400 million in 1973, solidifying University of Washington as a top recipient of federal research funds in the United States. The establishment of technology giants such as Microsoft, Boeing and Amazon in the local area also proved to be highly influential in the University of Washington’s fortunes, not only improving graduate prospects but also helping to attract millions of dollars in university and research funding through its distinguished faculty and extensive alumni network.

21st century

In 1990, the University of Washington opened its additional campuses in Bothell and Tacoma. Although originally intended for students who have already completed two years of higher education, both schools have since become four-year universities with the authority to grant degrees. The first freshman classes at these campuses started in fall 2006. Today both Bothell and Tacoma also offer a selection of master’s degree programs.

In 2012, the University began exploring plans and governmental approval to expand the main Seattle campus, including significant increases in student housing, teaching facilities for the growing student body and faculty, as well as expanded public transit options. The University of Washington light rail station was completed in March 2015, connecting Seattle’s Capitol Hill neighborhood to the University of Washington Husky Stadium within five minutes of rail travel time. It offers a previously unavailable option of transportation into and out of the campus, designed specifically to reduce dependence on private vehicles, bicycles and local King County buses.

University of Washington has been listed as a “Public Ivy” in Greene’s Guides since 2001, and is an elected member of the American Association of Universities. Among the faculty by 2012, there have been 151 members of American Association for the Advancement of Science, 68 members of the National Academy of Sciences(US), 67 members of the American Academy of Arts and Sciences, 53 members of the National Academy of Medicine(US), 29 winners of the Presidential Early Career Award for Scientists and Engineers, 21 members of the National Academy of Engineering(US), 15 Howard Hughes Medical Institute Investigators, 15 MacArthur Fellows, 9 winners of the Gairdner Foundation International Award, 5 winners of the National Medal of Science, 7 Nobel Prize laureates, 5 winners of Albert Lasker Award for Clinical Medical Research, 4 members of the American Philosophical Society, 2 winners of the National Book Award, 2 winners of the National Medal of Arts, 2 Pulitzer Prize winners, 1 winner of the Fields Medal, and 1 member of the National Academy of Public Administration. Among UW students by 2012, there were 136 Fulbright Scholars, 35 Rhodes Scholars, 7 Marshall Scholars and 4 Gates Cambridge Scholars. UW is recognized as a top producer of Fulbright Scholars, ranking 2nd in the US in 2017.

The Academic Ranking of World Universities (ARWU) has consistently ranked University of Washington as one of the top 20 universities worldwide every year since its first release. In 2019, University of Washington ranked 14th worldwide out of 500 by the ARWU, 26th worldwide out of 981 in the Times Higher Education World University Rankings, and 28th worldwide out of 101 in the Times World Reputation Rankings. Meanwhile, QS World University Rankings ranked it 68th worldwide, out of over 900.

U.S. News & World Report ranked University of Washington 8th out of nearly 1,500 universities worldwide for 2021, with University of Washington’s undergraduate program tied for 58th among 389 national universities in the U.S. and tied for 19th among 209 public universities.

In 2019, it ranked 10th among the universities around the world by SCImago Institutions Rankings. In 2017, the Leiden Ranking, which focuses on science and the impact of scientific publications among the world’s 500 major universities, ranked University of Washington 12th globally and 5th in the U.S.

In 2019, Kiplinger Magazine’s review of “top college values” named University of Washington 5th for in-state students and 10th for out-of-state students among U.S. public colleges, and 84th overall out of 500 schools. In the Washington Monthly National University Rankings University of Washington was ranked 15th domestically in 2018, based on its contribution to the public good as measured by social mobility, research, and promoting public service.

:format(webp)/cdn.vox-cdn.com/uploads/chorus_image/image/63891300/setiathome_20_getty_ringer_2.0.jpg)