SAP is the leading ERP solution across industries around the world. Data integration with other data platforms, applications, databases, and APIs is one of the hardest challenges in the IT and software landscape. This blog post explores how SAP Datasphere in conjunction with the data streaming platform Apache Kafka enables a reliable, scalable and open data fabric for connecting SAP business objects of ECC and S/4HANA ERP with other real-time, batch, or request-response interfaces.

What is SAP ERP?

SAP is a German multinational software corporation that develops enterprise software to manage business operations and customer relations. SAP is best known for its ERP (Enterprise Resource Planning) software, which helps organizations integrate and streamline their business processes.

A wide range of industries and companies of all sizes use it. SAP ERP is one of the most widely used ERP solutions globally. Contrary to what many people think, SAP is not a single product. Over the years, SAP has expanded its product portfolio, which now includes cloud-based solutions, analytics, database management, and other enterprise software applications.

SAP ECC, S/4HANA, and more ERP Products

SAP offers a range of ERP products that cater to different business needs and industries. Some of the key SAP ERP products include:

- SAP S/4HANA: SAP S/4HANA is the flagship ERP suite that represents the next generation of SAP’s ERP solutions. The product is built on the SAP HANA in-memory database and provides a simplified data model, improved user experience, and advanced analytics capabilities. It covers core business functions, such as finance, supply chain, manufacturing, procurement, and more.

- SAP ERP Central Component (ECC): ECC is the predecessor to SAP S/4HANA and is still widely used by many organizations. It includes various modules, such as SAP ERP Financials, SAP ERP Human Capital Management (HCM), SAP ERP Operations, and others.

- SAP Business ByDesign: This is a cloud-based ERP solution designed for small to medium-sized enterprises (SMEs). It integrates core business functions, such as financials, human resources, procurement, supply chain management, and customer relationship management (CRM).

- SAP Business One: Another ERP solution targeted at small and medium-sized businesses, SAP Business One is an integrated suite that covers areas such as accounting, sales, purchasing, inventory, and production.

- SAP S/4HANA Cloud: This is a cloud-based version of SAP S/4HANA, offering similar functionalities but with the advantages of cloud deployment, including scalability, accessibility, and reduced infrastructure management.

- SAP Business Suite: This is a set of business applications that includes SAP ERP and other related products. It comprises different modules to address various business processes.

- SAP All-in-One: This is an industry-specific version of SAP ERP designed for midsize companies. It provides pre-configured industry solutions for sectors such as manufacturing, retail, and healthcare.

This product list might be out of date when you read it. SAP continuously develops its product offerings. Products get new names from time to time, consolidate, or deprecate. In other words, SAP modernization, integration, and migration are usually an ongoing effort that never ends.

What is SAP Datasphere?

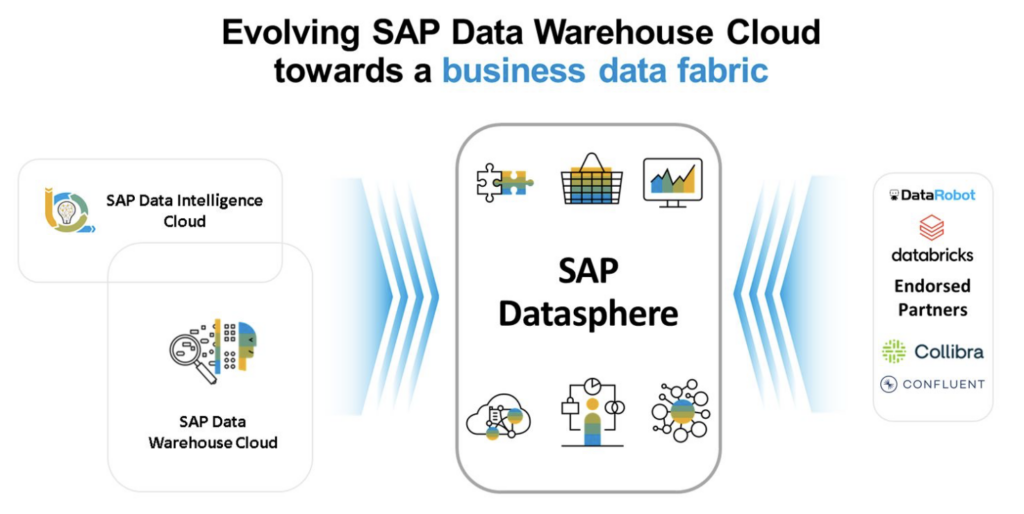

SAP Datasphere is the next generation of SAP Data Warehouse Cloud. The platform provides a comprehensive data service that enables data professionals to deliver seamless and scalable access to critical business data.

SAP Datasphere is a cloud-based product packaged within SAP’s Business Technology Platform (BTP). Datasphere brings together two previously standalone products, SAP Data Intelligence Cloud (DIC) and SAP Data Warehouse Cloud (DWC), into one cloud-native data integration and data management platform. The solution allows SAP customers to ingest, integrate, store, and analyze core SAP ERP data, as well as to share this data with other analytical services and downstream applications.

SAP Datasphere = Cloud Data Warehouse and Analytics Platform

Datasphere is the core part of a new solution, known as Business Data Fabric, to simplify data integration and management involving SAP ERP backend data. A key focus of SAP Datasphere is business intelligence and analytics.

I see Datasphere as a general data warehouse / data lake / lakehouse similar to Snowflake or Databricks, but focused on SAP data, with deep integration into the SAP ERP ecosystem and surrounding applications.

However, the out-of-the-box availability of SAP ERP data from SAP ECC, S/4HANA, and other SAP apps enables a simple but powerful opportunity for data integration beyond the SAP landscape. No need to use legacy SAP protocols like BAPI or IDoc anymore. Instead, SAP Datasphere provides a unified way to discover, connect, and manage data across different data sources, systems, and landscapes.

Features of SAP Datasphere and Complementary Software Partnerships

The key features of SAP Datasphere include:

- Data Connectivity: SAP Datasphere enables organizations to connect to and access data from various sources, whether they are on-premises or in the cloud. It supports integration with different databases, data lakes, and other data repositories.

- Data Orchestration: The platform allows organizations to orchestrate data flows and processing across different data environments. This can be essential for managing complex data pipelines and ensuring data consistency and coherence.

- Data Governance: SAP Datasphere includes features for data governance, providing tools for managing metadata, ensuring data quality, and enforcing data policies across the distributed landscape.

- Unified Data Discovery: The platform offers a unified view of data assets, helping organizations discover and understand the available data resources across their entire landscape.

- Multi-Cloud and Edge Support: SAP Datasphere works in multi-cloud and edge computing environments, providing flexibility and scalability for organizations with diverse data storage and processing needs.

This sounds like any other data management platform, doesn’t it?

But the above features focus mainly on SAP environments. Therefore, Datasphere has a few strategic software partnerships:

- Confluent (data streaming)

- Databricks (data lakehouse)

- Collibra (data governance)

- DataRobot (automated machine learning)

This emphasizes the strength of Datasphere within the SAP ecosystem, while the partners connect non-SAP IT infrastructure and applications with SAP environments bidirectionally.

SAP Datasphere = One-Stop-Shop for Multi-Generation SAP ERP Systems

SAP Datasphere is more than just an analytical platform for SAP ERP data.

Datasphere leverages SAP internal tooling to access data directly from SAP systems. It is a complete data integration and analytics solution optimized for collecting and preparing data from all SAP ERP systems of multiple generations. For the first time in its history, SAP is making core ERP data from numerous back-end systems available in a one-stop-shop fashion through Datasphere.

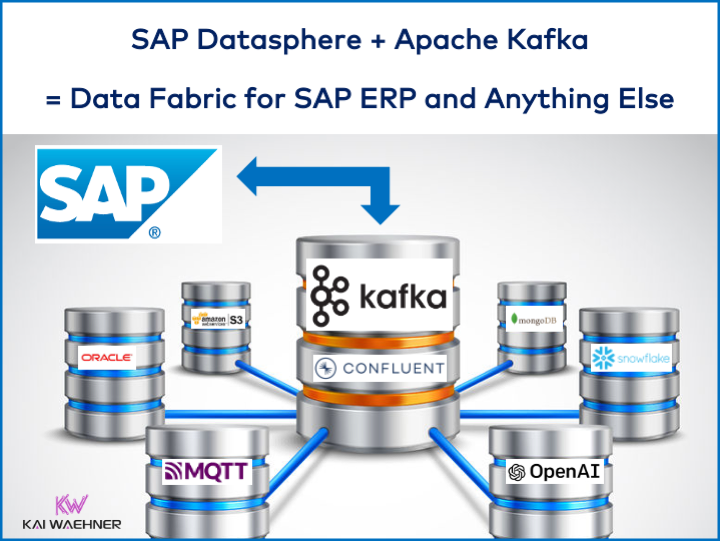

This brings us to the excellent opportunity of combining SAP business objects with Apache Kafka and the rest of the enterprise architecture.

Why Apache Kafka for SAP Integration?

Apache Kafka is a distributed streaming platform that has gained widespread popularity for its ability to handle large-scale, real-time data streaming and event processing. When it comes to SAP integration, there are several reasons organizations choose to use Apache Kafka:

- Real-time Data Streaming: Apache Kafka is designed for real-time data streaming, making it well-suited for scenarios where timely and continuous data updates are crucial. This is important in SAP environments where real-time integration is essential for various business processes.

- Scalability: Kafka is highly scalable and can handle large volumes of data and high-throughput requirements. SAP systems often handle massive amounts of data. Kafka’s scalability enables efficient management and processing of this data.

- Reliability and Fault Tolerance: Kafka is known for its reliability and fault tolerance. It ensures data durability and availability, which is essential for critical applications in SAP environments, e.g., in finance or supply chain business processes. Features like rolling upgrades enable continuous operation with zero downtime.

- Decoupling Systems: Kafka facilitates the decoupling of systems by acting as an intermediary for data exchange. This decoupling allows SAP systems and other applications to communicate without being directly connected, leading to a more flexible and modular architecture. Kafka ensures data consistency across real-time and non-real-time systems.

- Event-Driven Architecture: Kafka supports an event-driven architecture, which aligns well with modern integration patterns. The streaming platform efficiently propagates events, such as changes in SAP data or system events. This enables a more responsive and agile IT landscape. Kafka Connect enables integration with traditional messaging platforms like IBM MQ, TIBCO EMS, or Solace.

- Integration with Big Data Ecosystem

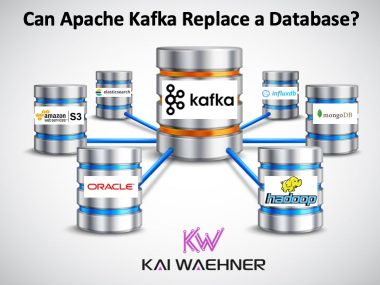

- Kafka integrates well with the broader big data ecosystem, including tools like Apache Hadoop, Apache Spark, Elasticsearch, MongoDB, and others. This can be beneficial for organizations looking to analyze and derive insights from SAP data in combination with other data sources and data sinks. Kafka is a much more flexible, scalable and reliable middleware compared to other data integration tools (including SAP middleware like SAP PI).

- Message Retention: Kafka stores messages for a configurable period, allowing systems to catch up on missed messages in case of temporary disruptions. This is particularly useful in scenarios where SAP systems may be temporarily offline, unreachable, or unable to handle the throughput. It also helps when transaction costs need to be reduced by offloading the consumption of downstream applications to a cheaper platform like Kafka. Tiered Storage for Kafka is a significant step toward using Kafka as a long-term event store for ERP information.

- Support for Multiple Protocols: The Kafka ecosystem supports various communication protocols (like Kafka, HTTP, File, WebSockets, and more), making it versatile for integration with different systems and technologies. This flexibility is crucial in heterogeneous IT environments, where SAP systems coexist with other technologies.

- Open Source Community and Ecosystem: Kafka has a vibrant open-source community and a rich ecosystem of connectors and tools. This ecosystem can simplify integration efforts by providing pre-built connectors for SAP systems and other common technologies.

- Analytical and Operational Workloads: Kafka was initially built for big data analytics use cases. However, most organizations now leverage the technology for operational workloads, like orders or payments. Kafka has evolved over the years and even introduced a transaction API for exactly-once semantics.
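The decoupling and message retention points above can be illustrated with a toy, in-memory model of a single topic partition. This is a minimal sketch, not the Kafka client API: `TopicLog`, `Consumer`, the order numbers, and the seven-day retention are hypothetical choices for illustration only. It shows that producers only append, that each consumer tracks its own offset, and that an offline system can catch up later as long as the records are still inside the retention window.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Record:
    offset: int
    timestamp: float  # seconds since an arbitrary epoch (assumption of this sketch)
    key: str
    value: dict


@dataclass
class TopicLog:
    """Toy single-partition, append-only log. NOT the Kafka client API."""
    retention_seconds: float
    records: List[Record] = field(default_factory=list)
    next_offset: int = 0

    def append(self, key: str, value: dict, timestamp: float) -> int:
        # Producers only append; they never wait for, or know about, consumers.
        offset = self.next_offset
        self.records.append(Record(offset, timestamp, key, value))
        self.next_offset += 1
        return offset

    def enforce_retention(self, now: float) -> None:
        # Delete records older than the retention window; offsets are never reused.
        self.records = [r for r in self.records
                        if now - r.timestamp <= self.retention_seconds]

    def read_from(self, offset: int, max_records: int = 100) -> List[Record]:
        # If `offset` was already deleted, reading resumes at the earliest
        # retained record (similar in spirit to auto.offset.reset=earliest).
        return [r for r in self.records if r.offset >= offset][:max_records]


class Consumer:
    """Tracks its own position, so a slow or offline consumer never blocks others."""

    def __init__(self, name: str, log: TopicLog) -> None:
        self.name = name
        self.log = log
        self.position = 0
        self.seen: List[str] = []

    def poll(self, max_records: int = 100) -> int:
        batch = self.log.read_from(self.position, max_records)
        for record in batch:
            self.seen.append(record.key)
            self.position = record.offset + 1
        return len(batch)


if __name__ == "__main__":
    week = 7 * 24 * 3600.0
    log = TopicLog(retention_seconds=week)
    analytics = Consumer("analytics", log)
    billing = Consumer("billing", log)  # assume this system is offline at first

    for t, order_id in enumerate(["4711", "4712", "4713"]):
        log.append(order_id, {"status": "CREATED"}, timestamp=float(t))
    analytics.poll()  # the real-time consumer keeps up as events arrive

    log.append("4714", {"status": "CREATED"}, timestamp=3.0)
    analytics.poll()
    billing.poll()  # back online: catches up from offset 0
    print(analytics.seen == billing.seen)  # True

    log.enforce_retention(now=3.0 + week)
    late = Consumer("late", log)
    print(late.poll())  # 1: only "4714" is still inside the retention window
```

Retention is the trade-off here: a consumer that stays offline longer than the retention window loses access to the deleted records, which is why long-term storage options such as Tiered Storage matter for ERP data.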

An ERP environment should be real-time, scalable, and open. SAP ERP is not just one product or technology, and organizations always combine it with other open source frameworks, proprietary standard software, and SaaS. “Building a Postmodern ERP with Apache Kafka” explores how SAP ERP and other technologies provide the most value together in a flexible, open environment. Many next-generation ERP systems use Kafka under the hood, too, even if you don’t see it because it is a proprietary product or SaaS. Event-driven architectures are as helpful for software products as they are for any other software project.

Continuous SAP Migration and Cutover with Kafka

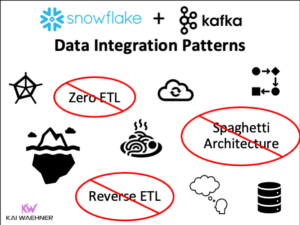

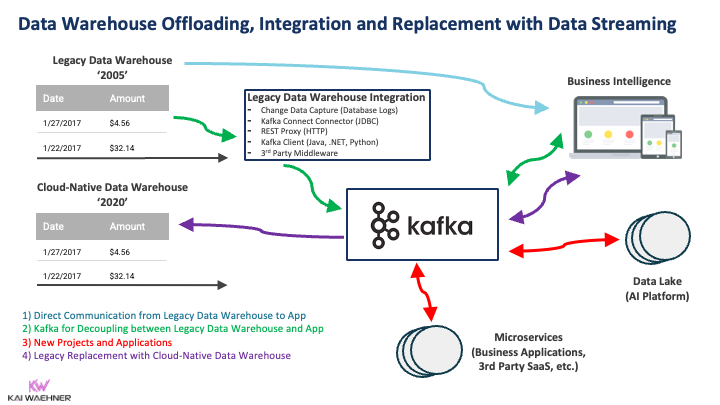

Integration between SAP ERP and other applications is crucial. Another kind of project is migration and ERP modernization, e.g., from SAP ECC to S/4HANA, or the migration between SAP and another software vendor.

An SAP migration project involves moving an SAP system or landscape from one environment to another. This could include moving from an on-premises environment to the cloud, upgrading to a newer version of SAP software, or consolidating multiple SAP instances. The exact steps and considerations for an SAP migration can vary based on the specific migration scenario.

Most SAP ERP migrations these days are from SAP ECC to SAP S/4HANA. These projects usually take years. Apache Kafka can provide valuable help in different SAP integration and migration scenarios.

The combination of real-time capabilities, an event store for true decoupling and data consistency across real-time and non-real-time systems, and data integration with non-SAP systems and APIs makes Kafka the perfect middleware for SAP modernization and ERP migrations.

I covered such a migration via Apache Kafka in a data warehouse modernization story where legacy and modern applications live in parallel for some months or even years until the final cutover is done.

Until the S/4HANA migration to the cloud is complete, SAP ECC on-premises often keeps running for years. The hybrid deployment and synchronization capabilities of Kafka make it unique for SAP migration and modernization projects.
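The parallel run described above can be sketched as replaying one retained event log into two targets and reconciling them before the cutover. This is a minimal, hypothetical illustration in plain Python, not a Kafka or SAP API: the event shape, the `ecc_view` and `s4_view` mappings, and the reconciliation rule are assumptions, and KUNNR and NETWR merely stand in for typical ECC sales-order fields.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical change event: (sales order number, ECC-style field values).
Event = Tuple[str, dict]


def apply_events(events: List[Event], transform: Callable[[dict], dict]) -> Dict[str, dict]:
    """Replay the event history in order into one target's state (upsert by key)."""
    state: Dict[str, dict] = {}
    for key, payload in events:
        state[key] = transform(payload)
    return state


def ecc_view(payload: dict) -> dict:
    # The legacy target keeps the ECC-style field names unchanged.
    return dict(payload)


def s4_view(payload: dict) -> dict:
    # Hypothetical mapping for the new target; real S/4HANA data models differ.
    return {"customer": payload["KUNNR"], "amount": payload["NETWR"]}


def reconcile(legacy: Dict[str, dict], modern: Dict[str, dict]) -> List[str]:
    """Return keys whose data disagrees between the two targets."""
    mismatches = []
    for key in sorted(legacy.keys() | modern.keys()):
        old, new = legacy.get(key), modern.get(key)
        if old is None or new is None or s4_view(old) != new:
            mismatches.append(key)
    return mismatches


if __name__ == "__main__":
    events = [
        ("0000004711", {"KUNNR": "100001", "NETWR": 250.0}),
        ("0000004712", {"KUNNR": "100002", "NETWR": 99.5}),
        ("0000004711", {"KUNNR": "100001", "NETWR": 300.0}),  # later change
    ]
    legacy = apply_events(events, ecc_view)
    modern = apply_events(events, s4_view)  # bootstrapped by replaying the same log
    print(reconcile(legacy, modern))  # []
```

Because both targets read the same ordered history, the new system can be bootstrapped by replaying from the beginning and kept in sync afterward. In this sketch, an empty mismatch list is one possible signal that the cutover can proceed.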

Confluent’s Fully Managed SAP Integration and Strategic Partnership

Data streaming defines a new software category. Confluent leads the data streaming industry. It provides a serverless cloud offering on all major public clouds and an offering for self-managed deployments, powered by Apache Kafka and Flink. In December 2023, the research company Forrester published “The Forrester Wave™: Streaming Data Platforms, Q4 2023”. The report explores what Confluent and other vendors like AWS, Microsoft, Google, Oracle, and Cloudera provide.

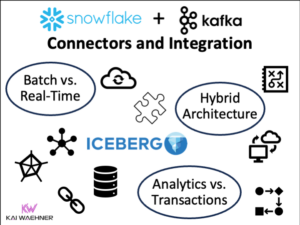

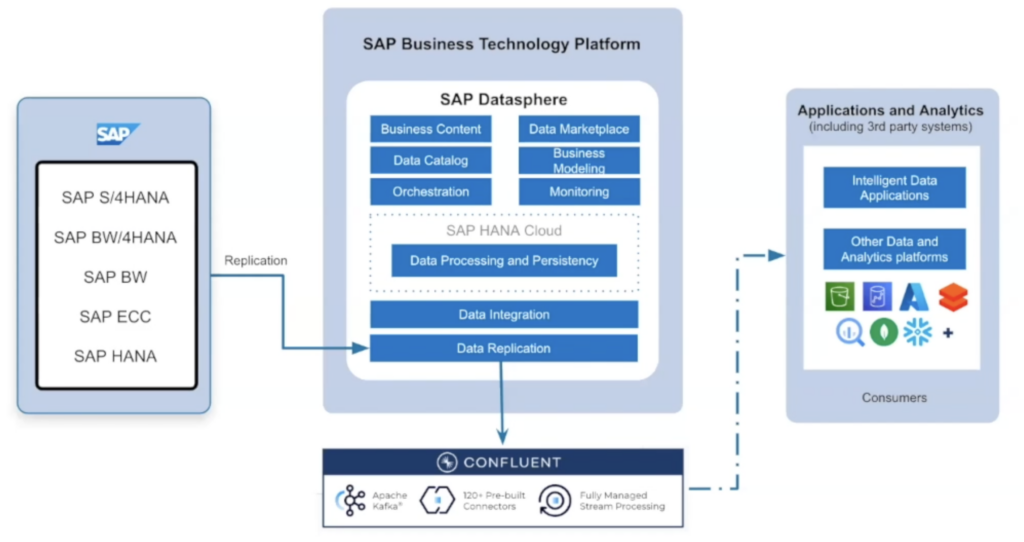

Confluent is now available in the SAP® Store, the online marketplace for SAP and partner offerings. The data streaming platform integrates with SAP Datasphere. The combination delivers a secure, governed solution for accessing SAP data as fully managed data streams for customers.

Confluent provides businesses that use SAP solutions with a cloud-native and complete data streaming platform available everywhere it’s needed – in the cloud, across clouds, on-premises, and hybrid environments. Configured directly within SAP Datasphere, the new Confluent integration allows businesses to:

- Build real-time applications at a lower cost with fully managed data streams powered by Confluent’s Kora Engine, which reduces the total cost of ownership for Kafka by up to 60%.

- Move SAP data anywhere it needs to go. Merge with third-party sources in real time via many pre-built connectors, including AWS Redshift, AWS S3, Databricks, Google Cloud BigQuery, MongoDB, and Snowflake paired with a serverless offering for Apache Flink.

- Maintain strict security, compliance, and governance standards with enterprise-grade data streaming security controls, and the industry’s only fully managed governance suite for Kafka.

Confluent in the SAP PartnerEdge Program

Confluent is a partner in the SAP PartnerEdge program. The SAP PartnerEdge program provides the enablement tools, benefits, and support to facilitate building high-quality, innovative applications focused on specific business needs, quickly and cost-effectively.

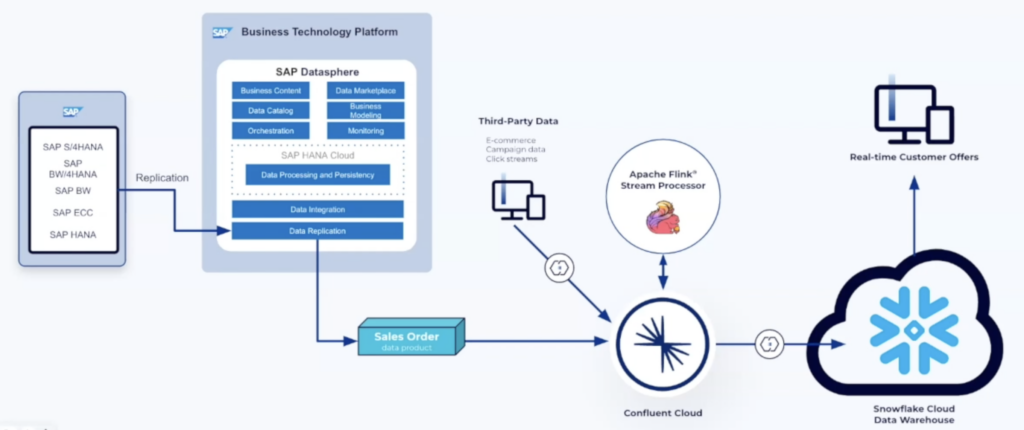

Here is an example architecture connecting SAP ERP and non-SAP applications (Flink and Snowflake in this example) with Datasphere and Confluent:

Confluent and SAP Datasphere are the perfect combination for building a data fabric for all enterprise data, just as many companies already leverage Apache Kafka as a data fabric for AI and machine learning.

Alternative Integration Options for SAP and Kafka

Is SAP Datasphere the new silver bullet for SAP ERP integration scenarios? No! As you learned in the above sections, Datasphere enables easy access to old and new SAP ERP data objects. However, Datasphere might have some drawbacks, too:

- New technology: The product had only been available for a few months at the time of writing this blog post in early 2024. It will mature, and its features will strengthen.

- Heavyweight: A direct integration with a proprietary SAP API call, e.g., BAPI, IDoc or the more modern Operational Data Provisioning (ODP) might be easier to implement and more cost-efficient from a TCO perspective for some projects.

- Vendor lock-in: Choosing an SAP product as middleware and/or analytics platform might not be the right strategy. Many organizations choose a best-of-breed approach for different domains and use cases instead of relying on a single vendor from a technology and licensing perspective.

One solution does not fit all integration use cases. Know the different options and make your evaluation.

Plenty of other options exist for SAP-Kafka integration. I explored tens of APIs, tools, and connectors for data integration between SAP ERP and Apache Kafka.

For example, look at the Confluent Hub and search for SAP Kafka integration. You will find many mature, lightweight, and innovative solutions from various vendors. For instance, INIT, Asapio, Advantco, KaTe, Onibex, and Qlik provide integrations via different open and proprietary SAP interfaces like ODP, OData, REST, BAPI, or IDoc.

SAP Datasphere and Kafka Connect the Entire Enterprise (and Hybrid Cloud)

It has never been easier to integrate the SAP ecosystem with the rest of the IT world in an enterprise architecture. SAP Datasphere supports straightforward access to SAP S/4HANA, SAP BW/4HANA, SAP BW, SAP ECC, and SAP HANA ERP data without the need for complex integration projects. In addition, SAP supports connectivity to Business Warehouse, SAP’s on-premises data warehouse solution.

Apache Kafka enables data consistency across SAP and non-SAP applications, in the data center and in the public cloud, no matter whether the data source or sink is real-time, near-real-time, batch, file-based, or a request-response API like HTTP/REST. The heart of the data fabric is event-based, scalable, and reliable.
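The consistency claim above rests on a simple property: every consumer reads the same ordered log and tracks its own position, so a real-time sink and a batch sink converge on the same state once both have caught up, regardless of their pace. The sketch below is a hypothetical, in-memory illustration, not a Kafka API; `Sink`, `simulate`, the material keys, and the batch size are made up for the example.

```python
from typing import Dict, List, Tuple

# Hypothetical ordered log of (offset, key, value) change events.
Log = List[Tuple[int, str, str]]


class Sink:
    """A downstream system that upserts records by key and tracks its own offset."""

    def __init__(self) -> None:
        self.state: Dict[str, str] = {}
        self.next_offset = 0

    def consume(self, log: Log, max_records: int) -> int:
        batch = [rec for rec in log if rec[0] >= self.next_offset][:max_records]
        for offset, key, value in batch:
            self.state[key] = value  # applied in log order, so the last write per key wins
            self.next_offset = offset + 1
        return len(batch)


def simulate(events: List[Tuple[str, str]], batch_size: int) -> Tuple[Sink, Sink]:
    log: Log = []
    realtime, batch = Sink(), Sink()
    for key, value in events:
        log.append((len(log), key, value))    # the producer appends one change event
        realtime.consume(log, max_records=1)  # near-real-time sink processes it immediately
    while batch.consume(log, max_records=batch_size):  # e.g. a nightly bulk load
        pass
    return realtime, batch


if __name__ == "__main__":
    events = [("MAT-1", "price=10"), ("MAT-2", "price=7"), ("MAT-1", "price=12")]
    realtime, nightly = simulate(events, batch_size=2)
    print(realtime.state == nightly.state)  # True
    print(nightly.state)  # {'MAT-1': 'price=12', 'MAT-2': 'price=7'}
```

The batch sink sees the intermediate value `price=10` only briefly and the real-time sink sees every step, yet both end in the same state because ordering is defined once, by the log, rather than by each consumer.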

Confluent is the leading vendor of data streaming technologies like Apache Kafka. The strategic partnership and deep product integration between SAP Datasphere and Confluent provides an excellent opportunity for any organization that needs to integrate SAP and the rest of the IT infrastructure.

Some people might tell you that Kafka is great for analytical use cases but not suited for operational, critical use cases (because some folks want to pitch another product for SAP integrations). That’s not accurate. Apache Kafka supports analytical AND transactional workloads. In fact, almost all customers I work with around the world use Confluent for transactional data from SAP ERP for orders, payments, fraud detection, and similar operational use cases.

How do you integrate with your SAP systems today? Do you already use modern technologies like Apache Kafka? What connectors or solutions do you use? Will you use SAP Datasphere in the future? Let’s connect on LinkedIn and discuss it! Stay informed about new blog posts by subscribing to my newsletter.