Math Inside Neural Network

A neural network is a mathematical computational model inspired by the interconnected neurons in the brain, which send signals from one part of the brain to another. Like the brain, a neural network learns from experience; beyond connectivity and learning from experience, there is hardly any further correlation between the two. Typically, this model is used for fuzzy problems where the relationship between the inputs and outputs is difficult to establish or cannot be written down as explicit rules, so past data is used to learn the relationship instead of hand-coding the logic. Neural networks have existed for many years, but they have recently become more popular because both the amount of data collected and the computing power available to process it have increased.

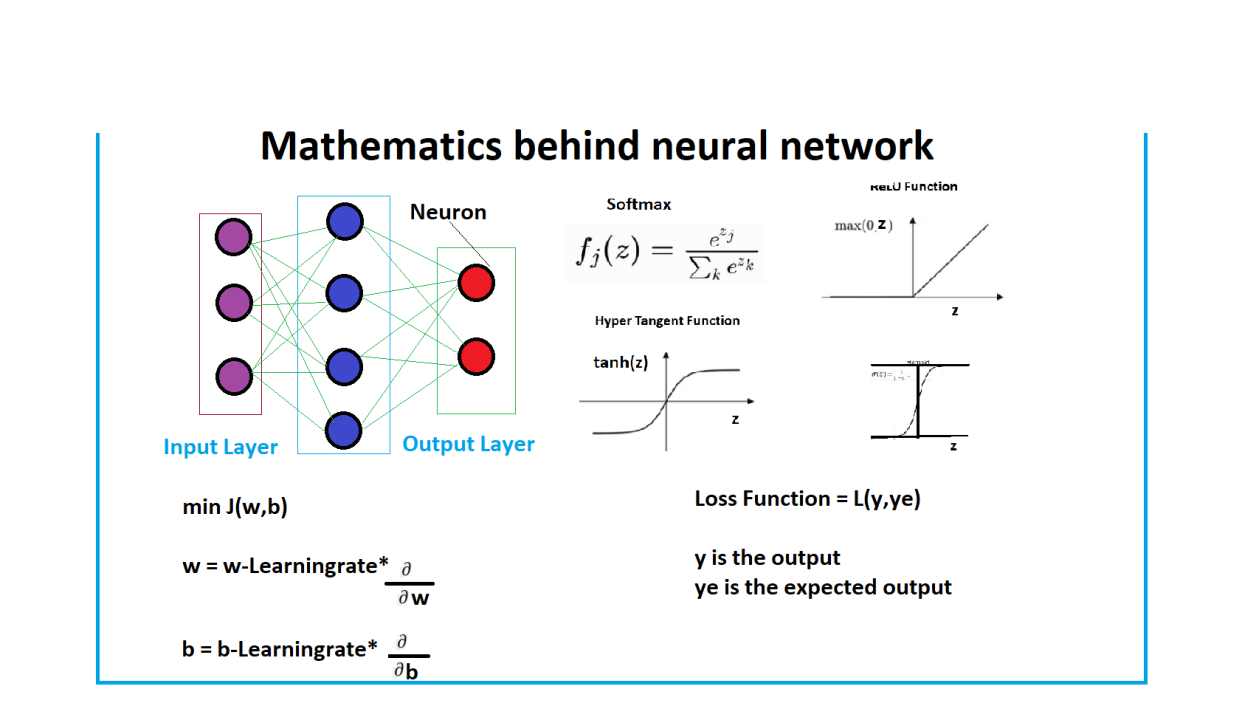

A typical neural network has an input layer with multiple inputs, one or more hidden layers with hidden units, and an output layer with one or more outputs. An example representation of such a network is shown in the figure.

It is important to understand what we are trying to compute with this model. A typical use of a neural network is to train it on labelled data, which is known as supervised learning. In supervised learning we are given a set of inputs and the corresponding set of outputs, and we feed both to the neural network so that it can learn from them. The question that remains is what exactly it learns, that is, which parameters of the network are set during this learning process. These learned parameters are called "weights" and "biases": a weight is a real number attached to each interconnecting arrow, and a bias is a real number associated with each neuron. The weight on a connecting arrow represents how much influence that input has on the neuron it feeds, and ultimately on the outputs of the network.

Let us take a single neuron and understand the mathematics going on inside it. A typical neuron and several different activation functions are shown below.

Looking closely at the two figures above, each neuron performs a linear computation, z(x) = sum of weighted inputs + bias, and then applies an activation function to this z(x).

- z(x) = sum of weighted inputs + bias = w1x1 + w2x2 + w3x3 + w4x4 + b

- Activation of z(x) = g(z(x))

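Activation functions are needed for two reasons. First, they establish a non-linear relationship between the inputs and the output of a neuron (the output of the single neuron here, not of the complete neural network); without a non-linearity, any stack of layers would reduce to a single linear computation. Second, bounded activation functions such as sigmoid and tanh confine the neuron's output to a fixed range, which makes the results easier to interpret and compute with.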
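The neuron computation described above can be sketched in a few lines of Python. This is a minimal illustration, not the article's own code: the function names (`sigmoid`, `relu`, `neuron_output`) and the input, weight and bias values are assumptions chosen only for the example.

```python
import math

def sigmoid(z):
    """Squash z into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Pass positive values through and clamp negatives to 0."""
    return max(0.0, z)

def neuron_output(inputs, weights, bias, activation):
    """Return g(z), where z = w1*x1 + ... + wn*xn + b."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)

# Hypothetical values for a neuron with four inputs (z works out to about 0.5).
x = [1.0, 2.0, 3.0, 4.0]
w = [0.5, -0.25, 0.1, 0.0]
b = 0.2

out_sigmoid = neuron_output(x, w, b, sigmoid)    # bounded in (0, 1)
out_tanh = neuron_output(x, w, b, math.tanh)     # bounded in (-1, 1)
out_relu = neuron_output(x, w, b, relu)          # unbounded above
```

The same weighted sum z gives a different output for each activation function, which is why the choice of g matters.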

Once the inputs and output of a neuron in layer 'L' are understood, the rest follows: the outputs of all neurons in layer 'L' are passed as inputs to the neurons in the next layer 'L+1', and so on until the activations of the units in the output layer are computed. This whole process is called 'forward propagation'.
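Forward propagation can be sketched as a loop over layers, where each layer's activations become the next layer's inputs. The sketch below assumes a sigmoid activation in every layer and represents each layer as a hypothetical `(weights, biases)` pair; the network shape and the numbers are made up for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def layer_forward(inputs, weights, biases):
    """Activations of one layer: one row of weights and one bias per neuron."""
    return [
        sigmoid(sum(w * a for w, a in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def forward_propagation(x, layers):
    """Feed x through each (weights, biases) layer in order."""
    activations = x
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations

# Hypothetical network: 2 inputs, one hidden layer of 3 units, 1 output unit.
layers = [
    ([[0.1, 0.4], [-0.3, 0.2], [0.5, -0.6]], [0.0, 0.1, -0.1]),
    ([[0.7, -0.2, 0.3]], [0.05]),
]
y_hat = forward_propagation([1.0, 0.5], layers)
```

Here `y_hat` is a list with one value per output unit; with sigmoid outputs each value lies between 0 and 1.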

Now that we have reached the output layer and calculated the activations of the output units, what comes next? The outputs of these units are compared with the expected outputs (remember that we know the expected outputs because we are training on past data), and the overall loss is calculated using a loss function. A typical loss function looks like the one below.
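The article does not say which loss function it uses, so the following sketch shows two commonly used ones as an assumption: mean squared error and binary cross-entropy. The function names and the sample values are hypothetical.

```python
import math

def mean_squared_error(y_true, y_pred):
    """Average of squared differences between expected and predicted outputs."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Average cross-entropy for 0/1 labels and predictions in (0, 1)."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)

# Made-up expected outputs and network predictions.
expected = [1.0, 0.0, 1.0]
predicted = [0.9, 0.2, 0.6]

mse = mean_squared_error(expected, predicted)
bce = binary_cross_entropy(expected, predicted)
```

In both cases the loss shrinks towards zero as the predictions approach the expected outputs, which is the property the training process relies on.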

This loss function is a function of the 'weight' and 'bias' parameters of the neural network. The whole objective is to minimize its value, in other words to find values of the weights and biases for which the difference between the actual and expected outputs is as small as possible; this is how the neural network trains. The goal is to reach the lowest point in the graph below, shown from different perspectives, which corresponds to the weights and biases that give the minimum loss. The idea is to keep moving down the slope towards that minimum point. Technically this is known as 'gradient descent', which means moving in the direction in which the loss decreases, that is, opposite to the gradient.
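To make the idea of walking down the slope concrete, here is a minimal sketch of gradient descent on a toy loss with a single parameter. The loss (w - 3)^2, the starting point and the step size are all assumptions chosen for illustration; a real network's loss depends on many weights and biases.

```python
def loss(w):
    """Toy loss with its minimum at w = 3."""
    return (w - 3.0) ** 2

def gradient(w):
    """Derivative of the toy loss with respect to w."""
    return 2.0 * (w - 3.0)

def gradient_descent(w_start, learning_rate, steps):
    """Repeatedly step against the gradient, i.e. downhill on the loss."""
    w = w_start
    for _ in range(steps):
        w = w - learning_rate * gradient(w)
    return w

w_final = gradient_descent(w_start=0.0, learning_rate=0.1, steps=100)
```

Each step moves `w` a little closer to the bottom of the curve; after enough steps `w_final` is very close to 3, where the toy loss is smallest.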

Once the gradients of the loss with respect to the parameters are known, the only thing remaining is to use them to calculate new values of 'w' and 'b' for each unit, working backwards from the output layer through the earlier layers. This is why the step is known as 'backward propagation'. The calculus of the derivatives with respect to w and b is shown below, which gives the new values of the parameters w and b.
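The article's own equations are not reproduced here, so the following is the standard gradient descent update, stated as an assumption about what was intended. The symbols are chosen for this sketch: J is the loss (to avoid clashing with 'L' for a layer), α is the learning rate, and a = g(z) is a neuron's activation.

$$
w := w - \alpha \frac{\partial J}{\partial w}, \qquad b := b - \alpha \frac{\partial J}{\partial b}
$$

For a single neuron with z = w1x1 + ... + wnxn + b, the chain rule gives the derivatives by working backwards from the loss to the parameters:

$$
\frac{\partial J}{\partial w_i} = \frac{\partial J}{\partial a} \cdot \frac{\partial a}{\partial z} \cdot \frac{\partial z}{\partial w_i} = \frac{\partial J}{\partial a} \, g'(z) \, x_i, \qquad \frac{\partial J}{\partial b} = \frac{\partial J}{\partial a} \, g'(z)
$$

In a multi-layer network the same chain rule is applied layer by layer, starting at the output and moving towards the input.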

The forward and backward calculations above are repeated for many training iterations over many training examples to make the neural network learn. The learning rate in the update equation controls how large a step is taken at each iteration, and so how fast or slowly the network learns; its value is usually chosen by trial and error to get the best results for the given problem.
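Putting the pieces together, a complete training loop can be sketched for the simplest possible case: a single sigmoid neuron trained with a cross-entropy loss. Everything here is an assumption made for illustration, including the function names, the toy data (logical OR), the learning rate and the iteration count. For this particular pairing of sigmoid and cross-entropy, the derivative of the loss with respect to z simplifies to (a - y).

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(x, w, b):
    """Forward propagation for a single neuron."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def train(examples, learning_rate=0.5, iterations=2000):
    """Train one sigmoid neuron with batch gradient descent."""
    n_inputs = len(examples[0][0])
    w = [0.0] * n_inputs
    b = 0.0
    m = len(examples)
    for _ in range(iterations):
        grad_w = [0.0] * n_inputs
        grad_b = 0.0
        for x, y in examples:
            a = predict(x, w, b)   # forward propagation
            dz = a - y             # backward propagation: dJ/dz
            for i, xi in enumerate(x):
                grad_w[i] += dz * xi
            grad_b += dz
        # Update step: move each parameter against its average gradient.
        w = [wi - learning_rate * gw / m for wi, gw in zip(w, grad_w)]
        b = b - learning_rate * grad_b / m
    return w, b

# Toy labelled data: logical OR.
or_examples = [
    ([0.0, 0.0], 0.0),
    ([0.0, 1.0], 1.0),
    ([1.0, 0.0], 1.0),
    ([1.0, 1.0], 1.0),
]
w, b = train(or_examples)
```

After training, `predict(x, w, b)` is below 0.5 for the input `[0.0, 0.0]` and above 0.5 for the other three inputs. Changing `learning_rate` shows the trade-off discussed above: very small values learn slowly, while very large values can overshoot the minimum.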

As you can see, there are other settings, such as the number of layers, the number of units and the choice of activation functions, that also affect how well a neural network learns a given problem. How these 'hyperparameters' are chosen is not discussed here, but they can have a large impact on learning in different cases. The idea behind this article was to 'train to train the neural network' and understand the basic mathematics behind it.

